Geometric Deep Learning

Grids, Groups, Graphs,

Geodesics, and Gauges

March 4, 2025

1 Imperial College London / USI IDSIA / Twitter

2 New York University

3 Qualcomm AI Research. Qualcomm AI Research is an initiative of Qualcomm Technologies, Inc.

4 DeepMind

Contents

Preface

For nearly two millenia since Euclid’s Elements, the word

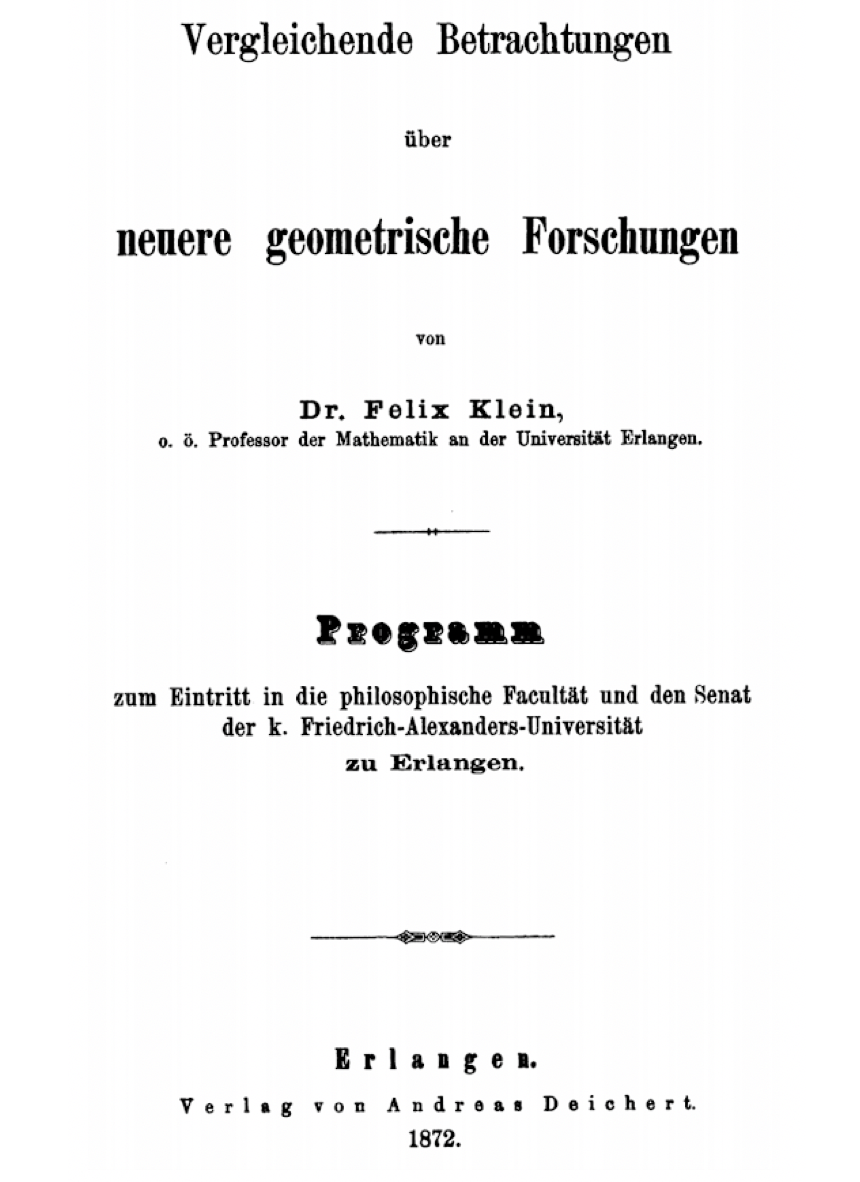

According to a popular belief, the Erlangen Programme was delivered in Klein’s inaugural address in October 1872. Klein indeed gave such a talk (though on December 7 of the same year), but it was for a non-mathematical audience and concerned

primarily his ideas of mathematical education. What is now called the ‘Erlangen Programme’ was actually a research prospectus brochure Vergleichende Betrachtungen über neuere geometrische Forschungen (“A comparative review of recent researches

in geometry”) he prepared as part of his professor appointment. See ?. ‘geometry’ has been synonymous with Euclidean geometry, as no other types of geometry existed. Euclid’s monopoly came to an end in the nineteenth century, with

examples of non-Euclidean geometries constructed by Lobachevesky, Bolyai, Gauss, and Riemann. Towards the end of that century, these studies had diverged into disparate fields, with mathematicians and philosophers debating the validity of and

relations between these geometries as well as the nature of the “one true geometry”.

According to a popular belief, the Erlangen Programme was delivered in Klein’s inaugural address in October 1872. Klein indeed gave such a talk (though on December 7 of the same year), but it was for a non-mathematical audience and concerned

primarily his ideas of mathematical education. What is now called the ‘Erlangen Programme’ was actually a research prospectus brochure Vergleichende Betrachtungen über neuere geometrische Forschungen (“A comparative review of recent researches

in geometry”) he prepared as part of his professor appointment. See ?. ‘geometry’ has been synonymous with Euclidean geometry, as no other types of geometry existed. Euclid’s monopoly came to an end in the nineteenth century, with

examples of non-Euclidean geometries constructed by Lobachevesky, Bolyai, Gauss, and Riemann. Towards the end of that century, these studies had diverged into disparate fields, with mathematicians and philosophers debating the validity of and

relations between these geometries as well as the nature of the “one true geometry”.

A way out of this pickle was shown by a young mathematician Felix Klein, appointed in 1872 as professor in the small Bavarian University of Erlangen. In a research prospectus, which entered the annals of mathematics as the Erlangen Programme, Klein proposed approaching geometry as the study of invariants, i.e. properties unchanged under some class of transformations, called the symmetries of the geometry. This approach created clarity by showing that various geometries known at the time could be defined by an appropriate choice of symmetry transformations, formalized using the language of group theory. For instance, Euclidean geometry is concerned with lengths and angles, because these properties are preserved by the group of Euclidean transformations (rotations and translations), while affine geometry studies parallelism, which is preserved by the group of affine transformations. The relation between these geometries is immediately apparent when considering the respective groups, because the Euclidean group is a subgroup of the affine group, which in turn is a subgroup of the group of projective transformations.

The impact of the Erlangen Programme on geometry was very profound. Furthermore, it spilled to other fields, especially physics, where symmetry principles allowed to derive conservation laws from first principles of symmetry (an astonishing result known as Noether’s Theorem), and even enabled the classification of elementary particles as irreducible representations of the symmetry group. Category theory, now pervasive in pure mathematics, can be “regarded as a continuation of the Klein Erlangen Programme, in the sense that a geometrical space with its group of transformations is generalized to a category with its algebra of mappings”, in the words of its creators Samuel Eilenber and Saunders Mac Lane. See ?.

At the time of writing, the state of the field of deep learning is somewhat reminiscent of the field of geometry in the nineteenth century. There is a veritable zoo of neural network architectures for various kinds of data, but few unifying principles. As in times past, this makes it difficult to understand the relations between various methods, inevitably resulting in the reinvention and re-branding of the same concepts in different application domains. For a novice trying to learn the field, absorbing the sheer volume of redundant ideas is a true nightmare.

In this text, we make a modest attempt to apply the Erlangen Programme mindset to the domain of deep learning, with the ultimate goal of obtaining a systematisation of this field and ‘connecting the dots’. We call this geometrisation attempt ‘Geometric Deep Learning’, and true to the spirit of Felix Klein, propose to derive different inductive biases and network architectures implementing them from first principles of symmetry and invariance. In particular, we focus on a large class of neural networks designed for analysing unstructured sets, grids, graphs, and manifolds, and show that they can be understood in a unified manner as methods that respect the structure and symmetries of these domains.

We believe this text would appeal to a broad audience of deep learning researchers, practitioners, and enthusiasts. A novice may use it as an overview and introduction to Geometric Deep Learning. A seasoned deep learning expert may discover new ways of deriving familiar architectures from basic principles and perhaps some surprising connections. Practitioners may get new insights on how to solve problems in their respective fields.

With such a fast-paced field as modern machine learning, the risk of writing a text like this is that it becomes obsolete and irrelevant before it sees the light of day. Having focused on foundations, our hope is that the key concepts we discuss will transcend their specific realisations“The knowledge of certain principles easily compensates the lack of knowledge of certain facts.” (?) — or, as Claude Adrien Helvétius put it, “la connaissance de certains principes supplée facilement à la connoissance de certains faits.”

Notation

| \(\Omega ,u\) |

Domain, point on domain |

| \(x(u) \in \mathcal {X}(\Omega ,\mathcal {C})\) |

Signal on the domain of the form \(x:\Omega \rightarrow \mathcal {C}\) |

| \(f(x) \in \mathcal {F}(\mathcal {X}(\Omega ))\) |

Functions on signals on the domain of the form \(f:\mathcal {X}(\Omega ) \rightarrow \mathcal {Y}\) |

| \(\fG ,\fg \) |

Group, element of the group |

| \(\fg .u, \rho (\fg )\) |

Group action, group representation |

| \(\vec {X}\in \mathcal {C}^{|\Omega |\times s}\) |

Matrix representing a signal on a discrete domain |

| \(\vec {x}_u\in \mathcal {C}^{s}\) |

Vector representing a discrete domain signal \(\vec {X}\) on element \(u\in \Omega \) |

| \(x_{uj}\in \mathcal {C}\) |

Scalar representing the \(j\)th component of a discrete domain signal \(\vec {X}\) on element \(u\in \Omega \) |

| \(\vec {F}(\vec {X})\) |

Function on discrete domain signals that returns another discrete domain signal, as a matrix |

| \(\tau :\Omega \rightarrow \Omega \) |

Automorphism of the domain |

| \(\eta :\Omega \rightarrow \Omega '\) |

Isomorphism between two different domains |

| \(\sigma : \mathcal {C}\rightarrow \mathcal {C}'\) |

Activation function (point-wise non-linearity) |

| \(G=(\mathcal {V},\mathcal {E})\) |

Graph with nodes \(\mathcal {V}\) and edges \(\mathcal {E}\) |

| \(\mathcal {T}=(\mathcal {V},\mathcal {E},\mathcal {F})\) |

Mesh with nodes \(\mathcal {V}\), edges \(\mathcal {E}\), and faces \(\mathcal {F}\) |

| \(x\star \theta \) |

Convolution with filter \(\theta \) |

| \(S_v\) |

Shift operator |

| \(\varphi _i\) |

Basis function |

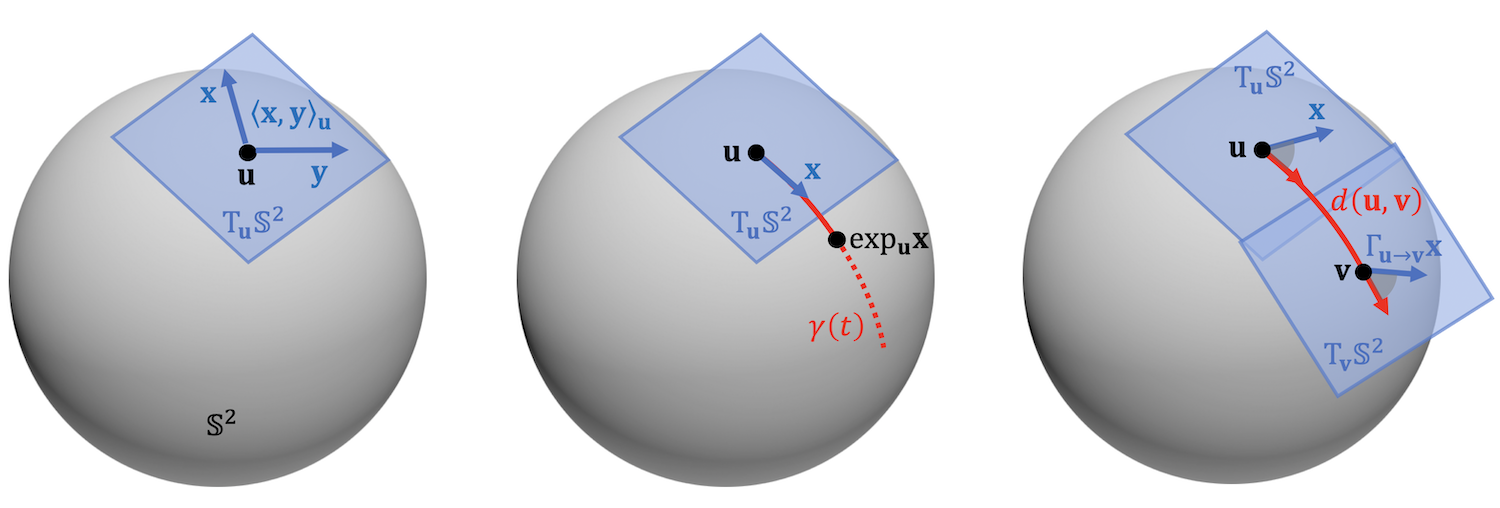

| \(T_u\Omega , T\Omega \) |

Tangent space at \(u\), tangent bundle |

| \(X \in T_u\Omega \) |

Tangent vector |

| \(g_u(X,Y) = \langle X, Y\rangle _u\) |

Riemannian metric |

| \(\ell (\gamma ), \ell _{uv}\) |

Length of a curve \(\gamma \), discrete metric on edge \((u,v)\) |

1 Introduction

The last decade has witnessed an experimental revolution in data science and machine learning, epitomised by deep learning methods. Indeed, many high-dimensional learning tasks previously thought to be beyond reach – such as computer vision, playing Go, or protein folding – are in fact feasible with appropriate computational scale. Remarkably, the essence of deep learning is built from two simple algorithmic principles: first, the notion of representation or feature learning, whereby adapted, often hierarchical, features capture the appropriate notion of regularity for each task, and second, learning by local gradient-descent, typically implemented as backpropagation.

While learning generic functions in high dimensions is a cursed estimation problem, most tasks of interest are not generic, and come with essential pre-defined regularities arising from the underlying low-dimensionality and structure of the physical world. This text is concerned with exposing these regularities through unified geometric principles that can be applied throughout a wide spectrum of applications.

Exploiting the known symmetries of a large system is a powerful and classical remedy against the curse of dimensionality, and forms the basis of most physical theories. Deep learning systems are no exception, and since the early days researchers have adapted neural networks to exploit the low-dimensional geometry arising from physical measurements, e.g. grids in images, sequences in time-series, or position and momentum in molecules, and their associated symmetries, such as translation or rotation. Throughout our exposition, we will describe these models, as well as many others, as natural instances of the same underlying principle of geometric regularity.

Such a ‘geometric unification’ endeavour in the spirit of the Erlangen Program serves a dual purpose: on one hand, it provides a common mathematical framework to study the most successful neural network architectures, such as CNNs, RNNs, GNNs, and Transformers. On the other, it gives a constructive procedure to incorporate prior physical knowledge into neural architectures and provide principled way to build future architectures yet to be invented.

Before proceeding, it is worth noting that our work concerns representation learning architectures and exploiting the symmetries of data therein. The many exciting pipelines where such representations may be used (such as self-supervised learning, generative modelling, or reinforcement learning) are not our central focusThe same applies for techniques used for optimising or regularising our architectures, such as Adam (?), dropout (?) or batch normalisation (?).. Hence, we will not review in depth influential neural pipelines such as variational autoencoders (?), generative adversarial networks (?), normalising flows (?), deep Q-networks (?), proximal policy optimisation (?), or deep mutual information maximisation (?). That being said, we believe that the principles we will focus on are of significant importance in all of these areas.

Further, while we have attempted to cast a reasonably wide net in order to illustrate the power of our geometric blueprint, our work does not attempt to accurately summarise the entire existing wealth of research on Geometric Deep Learning. Rather, we study several well-known architectures in-depth in order to demonstrate the principles and ground them in existing research, with the hope that we have left sufficient references for the reader to meaningfully apply these principles to any future geometric deep architecture they encounter or devise.

2 Learning in High Dimensions

Supervised machine learning, in its simplest formalisation, considers a set of \(N\) observations \(\gD =\{(x_i, y_i)\}_{i=1}^{ N}\) drawn i.i.d. from an underlying data distribution \(P\) defined over \(\gX \times \gY \), where \(\gX \) and \(\gY \) are respectively the data and the label domains. The defining feature in this setup is that \(\gX \) is a high-dimensional space: one typically assumes \(\gX = \R ^d\) to be a Euclidean space of large dimension \(d\).

Let us further assume that the labels \(y\) are generated by an unknown function \(f\), such that \(y_i = f(x_i)\), and the learning problem reduces to estimating the function \(f\) using a parametrised function class \(\gF =\{ f_{\thetab \in \Theta }\}\). Neural networks are a common realisation of such parametric function classes, in which case \(\thetab \in \Theta \) corresponds to the network weights. In this idealised setup, there is no noise in the labels, and modern deep learning systems typically operate in the so-called interpolating regime, where the estimated \(\tilde {f} \in \gF \) satisfies \(\tilde {f}(x_i) = f(x_i)\) for all \(i=1,\hdots , N\). The performance of a learning algorithm is measured in terms of the expected performance Statistical learning theory is concerned with more refined notions of generalisation based on concentration inequalities; we will review some of these in future work. on new samples drawn from \(P\), using some loss \(L(\cdot ,\cdot )\)

\[\gR (\tilde {f}):= \mathbb {E}_{P}\,\, L(\tilde {f}(x), f(x)),\]

with the squared-loss \(L(y,y')=\frac {1}{2}|y-y'|^2\) being among the most commonly used ones.

A successful learning scheme thus needs to encode the appropriate notion of regularity or inductive bias for \(f\), imposed through the construction of the function class \(\mathcal {F}\) and the use of regularisation. We briefly introduce this concept in the following section.

2.1 Inductive Bias via Function Regularity

Modern machine learning operates with large, high-quality datasets, which, together with appropriate computational resources, motivate the design of rich function classes \(\gF \) with the capacity to interpolate such large data. This mindset plays well

with neural networks, since even the simplest choices of architecture yields a dense class of functions.A set \(\mathcal {A}\subset \mathcal {X}\) is said to be dense in \(\mathcal {X}\) if its closure

\[ \mathcal {A}\cup \{ \displaystyle \lim _{i\rightarrow \infty } a_i : a_i \in \mathcal {A}\} = \mathcal {X}. \]

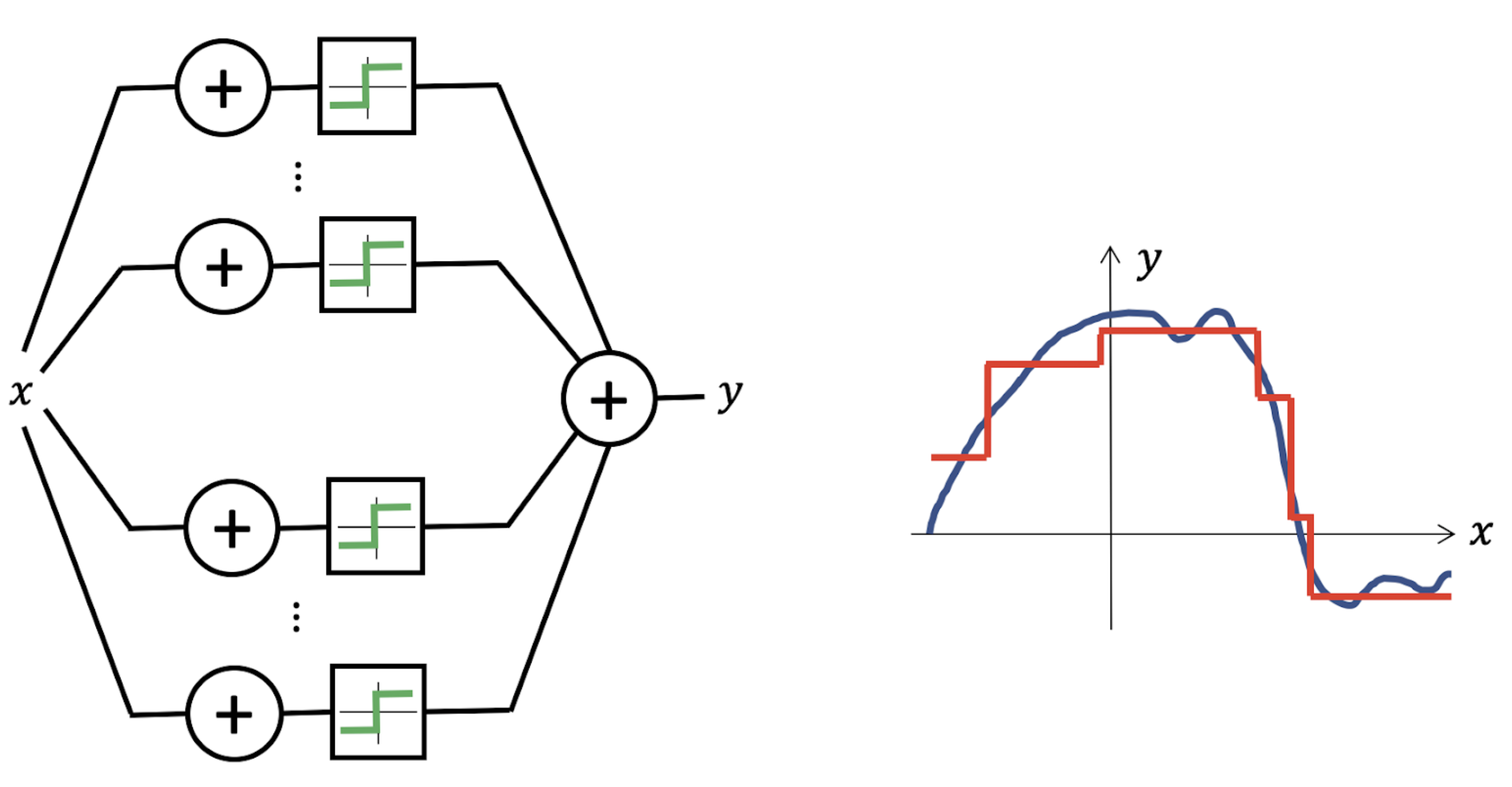

This implies that any point in \(\mathcal {X}\) is arbitrarily close to a point in \(\mathcal {A}\). A typical Universal Approximation result shows that the class of functions represented e.g. by a two-layer perceptron, \(f(\mathbf {x}) = \mathbf

{c}^\top \mathrm {sign}(\mathbf {A}\mathbf {x}+\mathbf {b})\) is dense in the space of continuous functions on \(\mathbb {R}^d\). The capacity to approximate almost arbitrary functions is the subject of various Universal

Approximation Theorems; several such results were proved and popularised in the 1990s by applied mathematicians and computer scientists (see e.g. ??????).

Universal Approximation, however, does not imply an absence of inductive bias. Given a hypothesis space \(\gF \) with universal approximation, we can define a complexity measure \(c: \gF \to \R _{+}\) and redefine our interpolation problem as

\[ \tilde {f} \in \arg \min _{g \in \gF } c(g) \quad \mathrm {s.t.} \quad g(x_i) = f(x_i) \quad \mathrm {for} \,\,\, i=1, \hdots , N, \]

i.e., we are looking for the most regular functions within our hypothesis class. For standard function spaces, this complexity measure can be defined as a norm, Informally, a norm \(\|x\|\) can be regarded as a “length” of vector \(x\). A Banach space is a complete vector space equipped with a norm. making \(\gF \) a Banach space and allowing to leverage a plethora of theoretical results in functional analysis. In low dimensions, splines are a workhorse for function approximation. They can be formulated as above, with a norm capturing the classical notion of smoothness, such as the squared-norm of second-derivatives \(\int _{-\infty }^{+\infty } |f''(x)|^2 \mathrm {d}x\) for cubic splines.

In the case of neural networks, the complexity measure \(c\) can be expressed in terms of the network weights, i.e. \(c(f_{\boldsymbol {\theta }}) = {c}(\boldsymbol {\theta })\). The \(L_2\)-norm of the network weights, known as weight decay, or the so-called path-norm (?) are popular choices in deep learning literature. From a Bayesian perspective, such complexity measures can also be interpreted as the negative log of the prior for the function of interest. More generally, this complexity can be enforced explicitly by incorporating it into the empirical loss (resulting in the so-called Structural Risk Minimisation), or implicitly, as a result of a certain optimisation scheme. For example, it is well-known that gradient-descent on an under-determined least-squares objective will choose interpolating solutions with minimal \(L_2\) norm. The extension of such implicit regularisation results to modern neural networks is the subject of current studies (see e.g. ????). All in all, a natural question arises: how to define effective priors that capture the expected regularities and complexities of real-world prediction tasks?

2.2 The Curse of Dimensionality

While interpolation in low-dimensions (with \(d=1,2\) or \(3\)) is a classic signal processing task with very precise mathematical control of estimation errors using increasingly sophisticated regularity classes (such as spline interpolants, wavelets, curvelets, or ridgelets), the situation for high-dimensional problems is entirely different.

In order to convey the essence of the idea, let us consider a classical notion of regularity that can be easily extended to high dimensions: 1-Lipschitz- functions \(f:\mathcal {X} \to \R \), i.e. functions satisfying \(|f(x) - f(x')| \leq \|x - x'\|\) for all \(x, x' \in \mathcal {X}\). This hypothesis only asks the target function to be locally smooth, i.e., if we perturb the input \(x\) slightly (as measured by the norm \(\|x - x'\|\)), the output \(f(x)\) is not allowed to change much. If our only knowledge of the target function \(f\) is that it is \(1\)-Lipschitz, how many observations do we expect to require to ensure that our estimate \(\tilde {f}\) will be close to \(f\)? Figure 2 reveals that the general answer is necessarily exponential in the dimension \(d\), signaling that the Lipschitz class grows ‘too quickly’ as the input dimension increases: in many applications with even modest dimension \(d\), the number of samples would be bigger than the number of atoms in the universe. The situation is not better if one replaces the Lipschitz class by a global smoothness hypothesis, such as the Sobolev Class \(\gH ^{s}(\Omega _d)\)A function \(f\) is in the Sobolev class \(\gH ^{s}(\Omega _d)\) if \(f \in L^2(\Omega _d)\) and the generalised \(s\)-th order derivative is square-integrable: \(\int |\omega |^{2s+1} |\hat {f}(\omega )|^2 d\omega < \infty \), where \(\hat {f}\) is the Fourier transform of \(f\); see Section 4.2. . Indeed, classic results (?) establish a minimax rate of approximation and learning for the Sobolev class of the order \(\epsilon ^{-d/s}\), showing that the extra smoothness assumptions on \(f\) only improve the statistical picture when \(s \propto d\), an unrealistic assumption in practice.

Figure 2: We consider a Lipschitz function \(f(x) = \sum _{j=1}^{2^d} z_j \phi (x-x_j)\) where \(z_j=\pm 1\), \(x_j \in \R ^d\) is placed in each quadrant, and \(\phi \) a locally supported Lipschitz ‘bump’. Unless we observe the function in most of the \(2^d\) quadrants, we will incur in a constant error in predicting it. This simple geometric argument can be formalised through the notion of Maximum Discrepancy (?), defined for the Lipschitz class as \(\kappa (d)=\mathbb {E}_{x,x'} \sup _{f \in \mathrm {Lip}(1)} \left | \frac {1}{N}\sum _{l} f(x_l) - \frac {1}{N}\sum _{l} f(x'_l) \right | \simeq N^{-1/d}\), which measures the largest expected discrepancy between two independent \(N\)-sample expectations. Ensuring that \(\kappa (d) \simeq \epsilon \) requires \(N = \Theta (\epsilon ^{-d})\); the corresponding sample \(\{x_l\}_l\) defines an \(\epsilon \)-net of the domain. For a \(d\)-dimensional Euclidean domain of diameter \(1\), its size grows exponentially as \(\epsilon ^{-d}\).

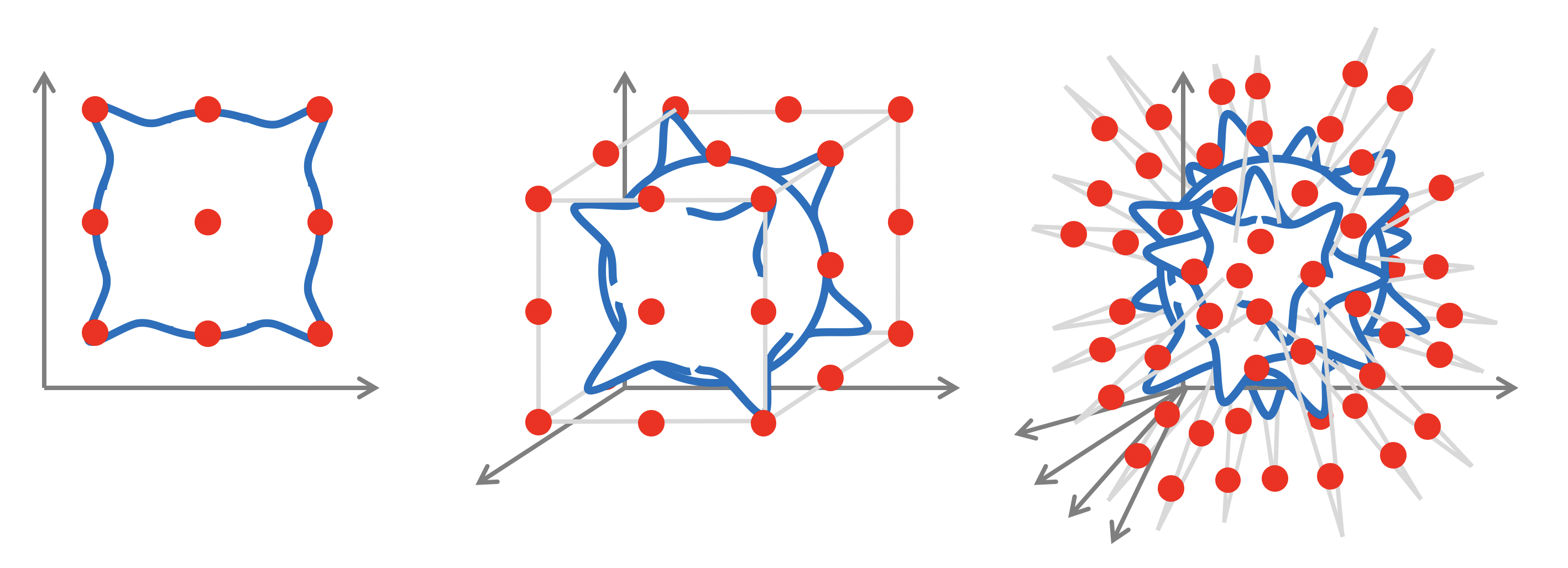

Fully-connected neural networks define function spaces that enable more flexible notions of regularity, obtained by considering complexity functions \(c\) on their weights. In particular, by choosing a sparsity-promoting regularisation, they have the ability to break this curse of dimensionality (?). However, this comes at the expense of making strong assumptions on the nature of the target function \(f\), such as that \(f\) depends on a collection of low-dimensional projections of the input (see Figure 3). In most real-world applications (such as computer vision, speech analysis, physics, or chemistry), functions of interest tend to exhibits complex long-range correlations that cannot be expressed with low-dimensional projections (Figure 3), making this hypothesis unrealistic. It is thus necessary to define an alternative source of regularity, by exploiting the spatial structure of the physical domain and the geometric priors of \(f\), as we describe in the next Section 3.

Figure 3: If the unknown function \(f\) is presumed to be well approximated as \(f(\mathbf {x}) \approx g(\mathbf {A}\mathbf {x})\) for some unknown \(\mathbf {A} \in \mathbb {R}^{k \times d}\) with \(k \ll d\), then shallow neural networks can capture this inductive bias, see e.g. ?. In typical applications, such dependency on low-dimensional projections is unrealistic, as illustrated in this example: a low-pass filter projects the input images to a low-dimensional subspace; while it conveys most of the semantics, substantial information is lost.

3 Geometric Priors

Modern data analysis is synonymous with high-dimensional learning. While the simple arguments of Section 2.1 reveal the impossibility of learning from generic high-dimensional data as a result of the curse of dimensionality, there is hope for physically-structured data, where we can employ two fundamental principles: symmetry and scale separation. In the settings considered in this text, this additional structure will usually come from the structure of the domain underlying the input signals: we will assume that our machine learning system operates on signals (functions) on some domain \(\Omega \). While in many cases linear combinations of points on \(\Omega \) is not well-defined\(\Omega \) must be a vector space in order for an expression \(\alpha u + \beta v\) to make sense., we can linearly combine signals on it, i.e., the space of signals forms a vector space. Moreover, since we can define an inner product between signals, this space is a Hilbert space.

The space of \(\mathcal {C}\)-valued signals on \(\Omega \) When \(\Omega \) has some additional structure, we may further restrict the kinds of signals in \(\mathcal {X}(\Omega , \mathcal {C})\). For example, when \(\Omega \) is a smooth manifold, we may require the signals to be smooth. Whenever possible, we will omit the range \(\mathcal {C}\) for brevity. (for \(\Omega \) a set, possibly with additional structure, and \(\mathcal {C}\) a vector space, whose dimensions are called channels)

\(\seteqnumber{0}{}{0}\)\begin{equation} \mathcal {X}(\Omega , \mathcal {C}) = \{ x : \Omega \rightarrow \mathcal {C} \} \end{equation}

is a function space that has a vector space structure. Addition and scalar multiplication of signals is defined as:

\(\seteqnumber{0}{}{1}\)\begin{equation*} (\alpha x + \beta y)(u) = \alpha x(u) + \beta y(u) \quad \text {for all} \quad u\in \Omega , \end{equation*}

with real scalars \(\alpha , \beta \). Given an inner product \(\langle v, w \rangle _\mathcal {C}\) on \(\mathcal {C}\) and a measureWhen the domain \(\Omega \) is discrete, \(\mu \) can be chosen as the counting measure, in which case the integral becomes a sum. In the following, we will omit the measure and use \(\mathrm {d}u\) for brevity. \(\mu \) on \(\Omega \) (with respect to which we can define an integral), we can define an inner product on \(\mathcal {X}(\Omega , \mathcal {C})\) as

\(\seteqnumber{0}{}{1}\)\begin{equation} \langle x, y \rangle = \int _{\Omega } \langle x(u), \, y(u) \rangle _{\mathcal {C}} \; \mathrm {d}\mu (u). \label {eqn:innerprod} \end{equation}

As a typical illustration, take \(\Omega = \mathbb {Z}_n\times \mathbb {Z}_n\) to be a two-dimensional \(n\times n\) grid, \(x\) an RGB image (i.e. a signal \(x : \Omega \rightarrow \R ^3\)), and \(f\) a function (such as a single-layer Perceptron) operating on \(3n^2\)-dimensional inputs. As we will see in the following with greater detail, the domain \(\Omega \) is usually endowed with certain geometric structure and symmetries. Scale separation results from our ability to preserve important characteristics of the signal when transferring it onto a coarser version of the domain (in our example, subsampling the image by coarsening the underlying grid).

We will show that both principles, to which we will generically refer as geometric priors, are prominent in most modern deep learning architectures. In the case of images considered above, geometric priors are built into Convolutional Neural Networks (CNNs) in the form of convolutional filters with shared weights (exploiting translational symmetry) and pooling (exploiting scale separation). Extending these ideas to other domains such as graphs and manifolds and showing how geometric priors emerge from fundamental principles is the main goal of Geometric Deep Learning and the leitmotif of our text.

3.1 Symmetries, Representations, and Invariance

Informally, a symmetry of an object or system is a transformation that leaves a certain property of said object or system unchanged or invariant. Such transformations may be either smooth, continuous, or discrete. Symmetries are ubiquitous in many machine learning tasks. For example, in computer vision the object category is unchanged by shifts, so shifts are symmetries in the problem of visual object classification. In computational chemistry, the task of predicting properties of molecules independently of their orientation in space requires rotational invariance. Discrete symmetries emerge naturally when describing particle systems where particles do not have canonical ordering and thus can be arbitrarily permuted, as well as in many dynamical systems, via the time-reversal symmetry (such as systems in detailed balance or the Newton’s second law of motion). As we will see in Section 4.1, permutation symmetries are also central to the analysis of graph-structured data.

Symmetry groups The set of symmetries of an object satisfies a number of properties. First, symmetries may be combined to obtain new symmetries: if \(\fg \) and \(\fh \) are two symmetries, then their compositions \(\fg \circ \fh \) and \(\fh \circ \fg \) We will follow the juxtaposition notation convention used in group theory, \(\fg \circ \fh = \fg \fh \), which should be read right-to-left: we first apply \(\fh \) and then \(\fg \). The order is important, as in many cases symmetries are non-commutative. Readers familiar with Lie groups might be disturbed by our choice to use the Fraktur font to denote group elements, as it is a common notation of Lie algebras. are also symmetries. The reason is that if both transformations leave the object invariant, then so does the composition of transformations, and hence the composition is also a symmetry. Furthermore, symmetries are always invertible, and the inverse is also a symmetry. This shows that the collection of all symmetries form an algebraic object known as a group. Since these objects will be a centerpiece of the mathematical model of Geometric Deep Learning, they deserve a formal definition and detailed discussion:

A group is a set \(\fG \) along with a binary operation \(\circ : \fG \times \fG \rightarrow \fG \) called composition (for brevity, denoted by juxtaposition \(\fg \circ \fh = \fg \fh \)) satisfying the following axioms:

Associativity: \((\fg \fh ) \fk = \fg (\fh \fk )\) for all \(\fg , \fh , \fk \in \fG \).

Identity: there exists a unique \(\fe \in \fG \) satisfying \(\fe \fg = \fg \fe = \fg \) for all \(\fg \in \fG \).

Inverse: For each \(\fg \in \fG \) there is a unique inverse \(\fg ^{-1} \in \fG \) such that \(\fg \fg ^{-1} = \fg ^{-1} \fg = \fe \).

Closure: The group is closed under composition, i.e., for every \(\fg , \fh \in \fG \), we have \(\fg \fh \ \in \fG \).

Note that commutativity is not part of this definition, i.e. we may have \(\fg \fh \neq \fh \fg \). Groups for which \(\fg \fh = \fh \fg \) for all \(\fg , \fh \in \fG \) are called commutative or AbelianAfter the Norwegian mathematician Niels Henrik Abel (1802–1829)..

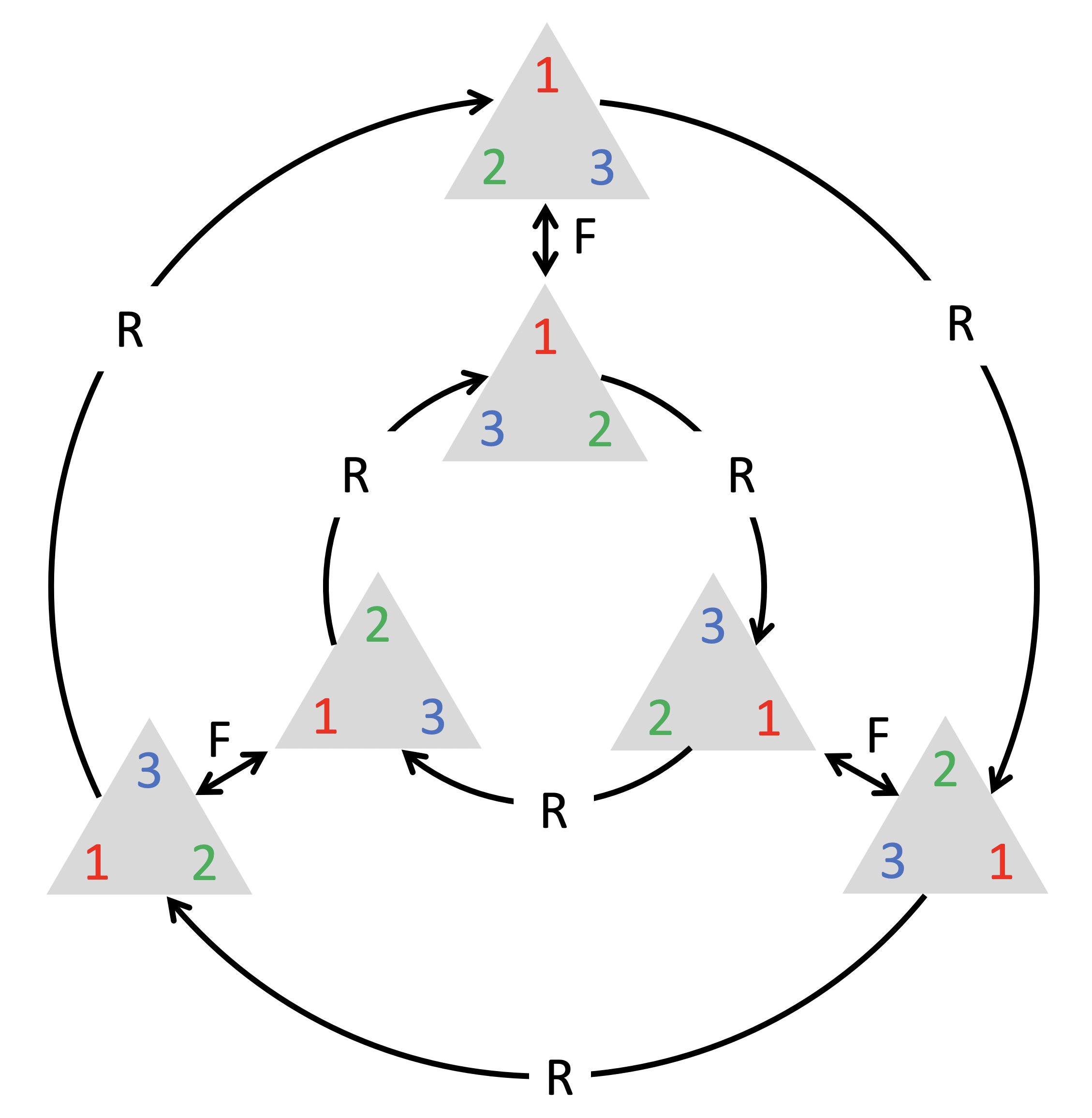

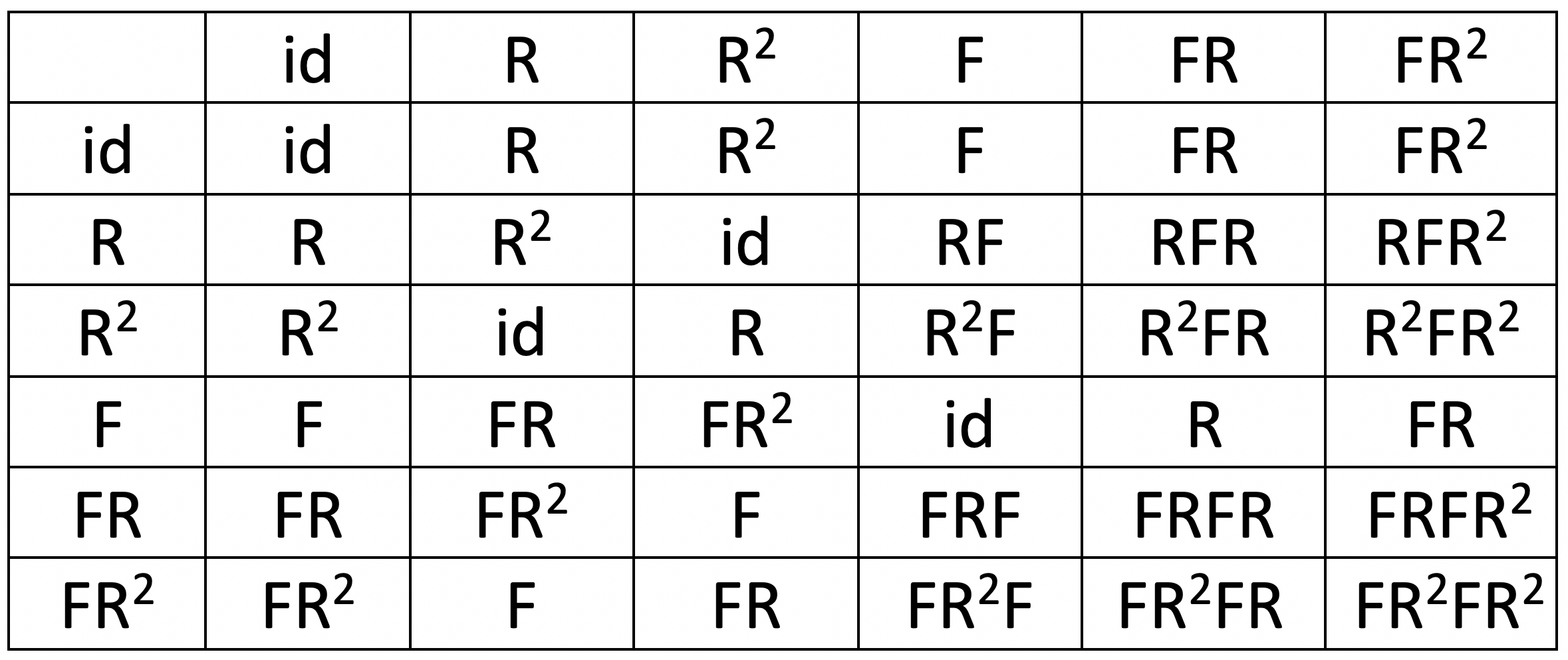

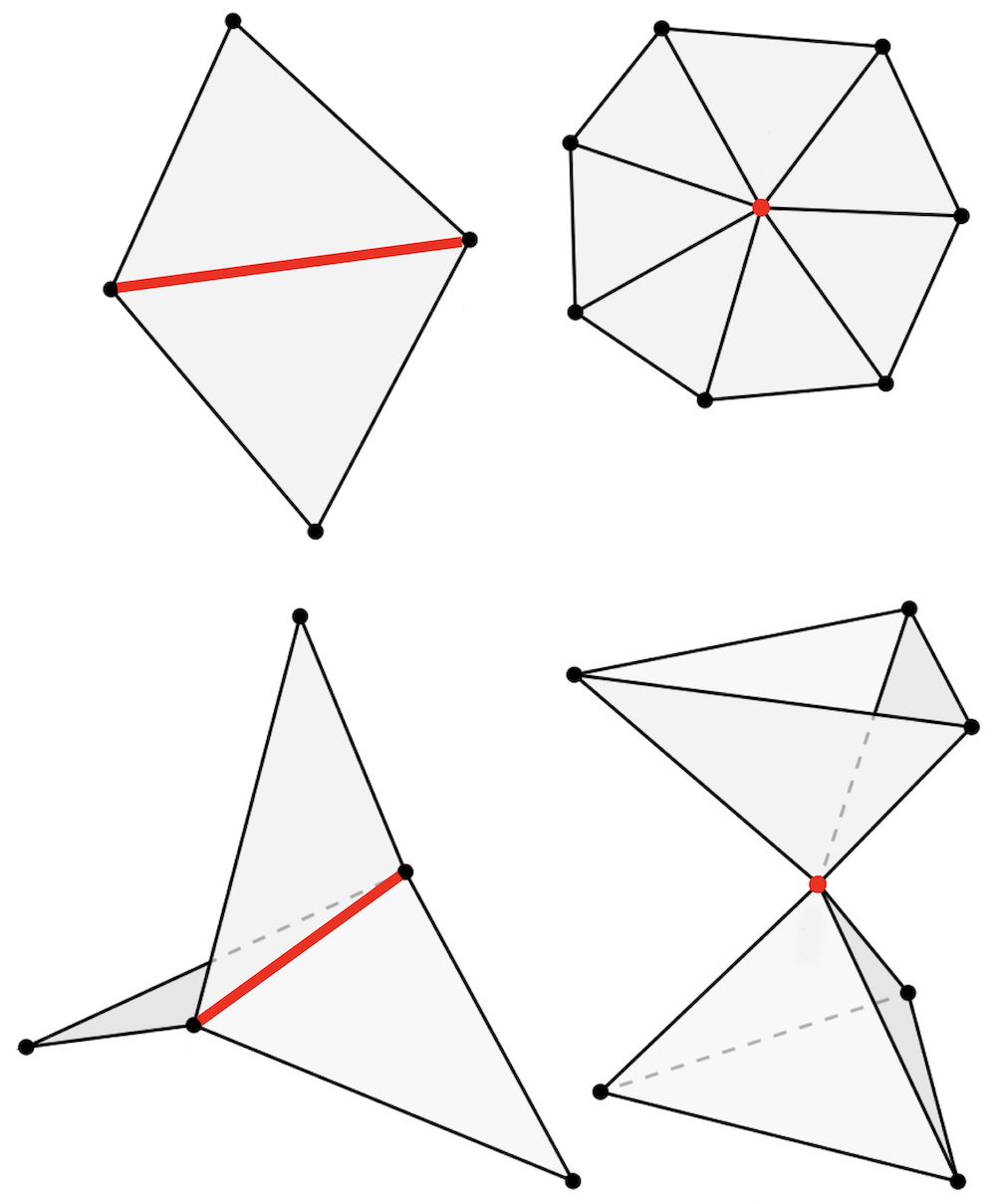

Though some groups can be very large and even infinite, they often arise from compositions of just a few elements, called group generators. Formally, \(\mathfrak {G}\) is said to be generated by a subset \(S \subseteq \mathfrak {G}\) (called the group generator) if every element \(\fg \in \fG \) can be written as a finite composition of the elements of \(S\) and their inverses. For instance, the symmetry group of an equilateral triangle (dihedral group \(\mathrm {D}_3\)) is generated by a \(60^\circ \) rotation and a reflection (Figure 4). The 1D translation group, which we will discuss in detail in the following, is generated by infinitesimal displacements; this is an example of a Lie group of differentiable symmetries.Lie groups have a differentiable manifold structure. One such example that we will study in Section 4.3 is the special orthogonal group \(\mathrm {SO}(3)\), which is a 3-dimensional manifold.

Note that here we have defined a group as an abstract object, without saying what the group elements are (e.g. transformations of some domain), only how they compose. Hence, very different kinds of objects may have the same symmetry group. For instance, the aforementioned group of rotational and reflection symmetries of a triangle is the same as the group of permutations of a sequence of three elements (we can permute the corners in the triangle in any way using a rotation and reflection – see Figure 4)The diagram shown in Figure 4 (where each node is associated with a group element, and each arrow with a generator), is known as the Cayley diagram..

Figure 4: Left: an equilateral triangle with corners labelled by \(1, 2, 3\), and all possible rotations and reflections of the triangle. The group \(\mathrm {D}_3\) of rotation/reflection symmetries of the triangle is generated by only two elements (rotation by \(60^\circ \) R and reflection F) and is the same as the group \(\Sigma _3\) of permutations of three elements. Right: the multiplication table of the group \(\mathrm {D}_3\). The element in the row \(\fg \) and column \(\fh \) corresponds to the element \(\fg \fh \).

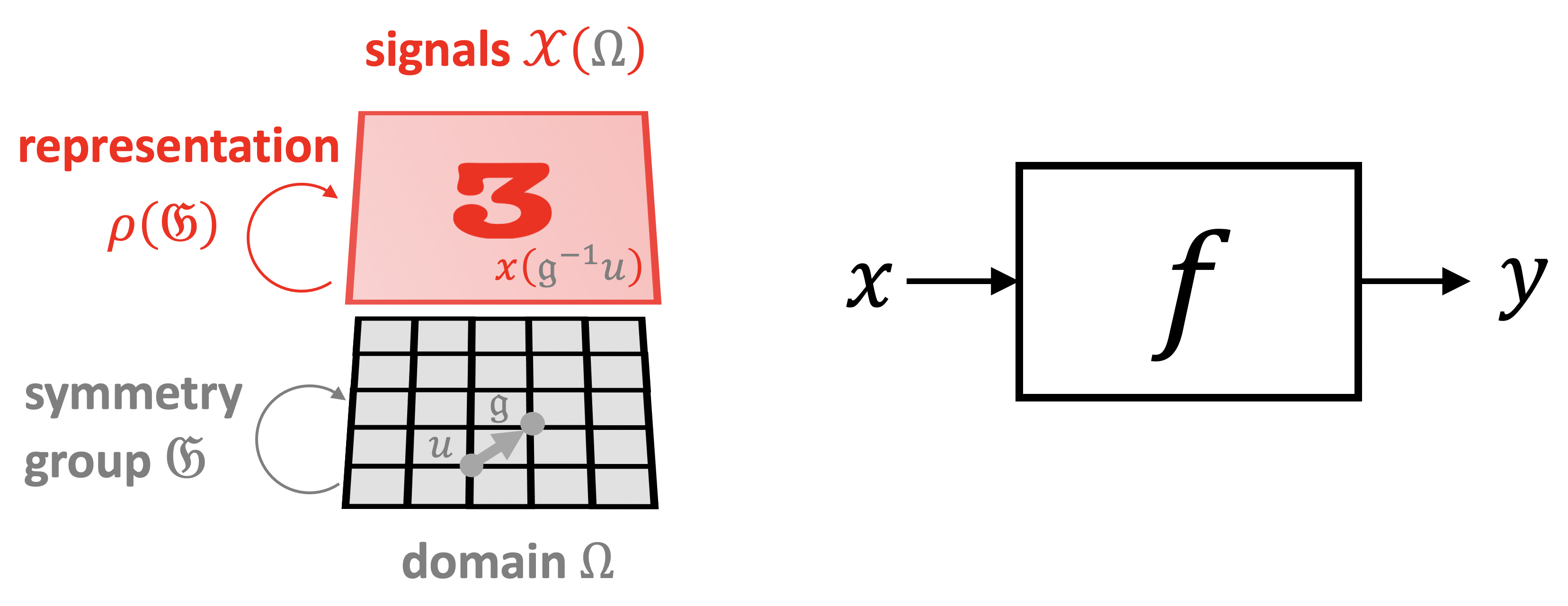

Group Actions and Group Representations Rather than considering groups as abstract entities, we are mostly interested in how groups act on data. Since we assumed that there is some domain \(\Omega \) underlying our data, we will study how the group acts on \(\Omega \) (e.g. translation of points of the plane), and from there obtain actions of the same group on the space of signals \(\mathcal {X}(\Omega )\) (e.g. translations of planar images and feature maps).

A group action Technically, what we define here is a left group action. of \(\fG \) on a set \(\Omega \) is defined as a mapping \((\fg , u) \mapsto \fg .u\) associating a group element \(\fg \in \fG \) and a point \(u\in \Omega \) with some other point on \(\Omega \) in a way that is compatible with the group operations, i.e., \(\fg .(\fh .u) = (\fg \fh ).u\) for all \(\fg , \fh \in \fG \) and \(u \in \Omega \). We shall see numerous instances of group actions in the following sections. For example, in the plane the Euclidean group \(\mathrm {E}(2)\) is the group of transformations of \(\R ^2\) that preserves Euclidean distancesDistance-preserving transformations are called isometries. According to Klein’s Erlangen Programme, the classical Euclidean geometry arises from this group., and consists of translations, rotations, and reflections. The same group, however, can also act on the space of images on the plane (by translating, rotating and flipping the grid of pixels), as well as on the representation spaces learned by a neural network. More precisely, if we have a group \(\fG \) acting on \(\Omega \), we automatically obtain an action of \(\fG \) on the space \(\mathcal {X}(\Omega )\):

\(\seteqnumber{0}{}{2}\)\begin{equation} (\fg . x)(u) = x(\fg ^{-1} u). \label {eq:group_action} \end{equation}

Due to the inverse on \(\fg \), this is indeed a valid group action, in that we have \((\fg . (\fh . x))(u) = ((\fg \fh ) . x)(u)\).

The most important kind of group actions, which we will encounter repeatedly throughout this text, are linear group actions, also known as group representations. The action on signals in equation (3) is indeed linear, in the sense that

\[ \fg . (\alpha x + \beta x') = \alpha (\fg . x) + \beta (\fg . x') \]

for any scalars \(\alpha , \beta \) and signals \(x, x' \in \mathcal {X}(\Omega )\). We can describe linear actions either as maps \((\fg , x) \mapsto \fg .x\) that are linear in \(x\), or equivalently, by currying, as a map \(\rho : \fG \rightarrow \R ^{n \times n}\)When \(\Omega \) is infinte, the space of signals \(\mathcal {X}(\Omega )\) is infinite dimensional, in which case \(\rho (\fg )\) is a linear operator on this space, rather than a finite dimensional matrix. In practice, one must always discretise to a finite grid, though. that assigns to each group element \(\fg \) an (invertible) matrix \(\rho (\fg )\). The dimension \(n\) of the matrix is in general arbitrary and not necessarily related to the dimensionality of the group or the dimensionality of \(\Omega \), but in applications to deep learning \(n\) will usually be the dimensionality of the feature space on which the group acts. For instance, we may have the group of 2D translations acting on a space of images with \(n\) pixels.

As with a general group action, the assignment of matrices to group elements should be compatible with the group action. More specifically, the matrix representing a composite group element \(\fg \fh \) should equal the matrix product of the representation of \(\fg \) and \(\fh \):

A \(n\)-dimensional real representation of a group \(\fG \) is a map \(\rho : \fG \rightarrow \R ^{n \times n}\), assigning to each \(\fg \in \fG \) an invertible matrix \(\rho (\fg )\), and satisfying the condition \(\rho (\fg \fh ) = \rho (\fg ) \rho (\fh )\) for all \(\fg , \fh \in \fG \). Similarly, a complex representation is a map \(\rho : \fG \rightarrow \mathbb {C}^{n \times n}\) satisfying the same equation. A representation is called unitary or orthogonal if the matrix \(\rho (\fg )\) is unitary or orthogonal for all \(\fg \in \fG \).

Written in the language of group representations, the action of \(\fG \) on signals \(x \in \mathcal {X}(\Omega )\) is defined as \(\rho (\fg ) x(u) = x(\fg ^{-1} u)\). We again verify that

\[ (\rho (\fg ) (\rho (\fh ) x))(u) = (\rho (\fg \fh ) x)(u). \]

Figure 5: Three spaces of interest in Geometric Deep Learning: the (physical) domain \(\Omega \), the space of signals \(\mathcal {X}(\Omega )\), and the hypothesis class \(\mathcal {F}(\mathcal {X}(\Omega ))\). Symmetries of the domain \(\Omega \) (captured by the group \(\fG \)) act on signals \(x\in \mathcal {X}(\Omega )\) through group representations \(\rho (\fg )\), imposing structure on the functions \(f\in \mathcal {F}(\mathcal {X}(\Omega ))\) acting on such signals.

Invariant and Equivariant functions

The symmetry of the domain \(\Omega \) underlying the signals \(\mathcal {X}(\Omega )\) imposes structure on the function \(f\) defined on such signals. It turns out to be a powerful inductive bias, improving learningIn general, \(f\) depends both on the signal an the domain, i.e., \(\mathcal {F}(\mathcal {X}(\Omega ), \Omega )\). We will often omit the latter dependency for brevity. efficiency by reducing the space of possible interpolants, \(\mathcal {F}(\mathcal {X}(\Omega ))\), to those which satisfy the symmetry priors. Two important cases we will be exploring in this text are invariant and equivariant functions.

A function \(f: \mathcal {X}(\Omega ) \rightarrow \mathcal {Y}\) is \(\fG \)-invariant if \(f(\rho (\fg )x) = f(x)\) for all \(\fg \in \fG \) and \(x \in \mathcal {X}(\Omega )\), i.e., its output is unaffected by the group action on the input.

A classical example of invariance is shift-invariance,Note that signal processing books routinely use the term ‘shift-invariance’ referring to shift-equivariance, e.g. Linear Shift-invariant Systems. arising in computer vision and pattern recognition applications such as image classification. The function \(f\) in this case (typically implemented as a Convolutional Neural Network) inputs an image and outputs the probability of the image to contain an object from a certain class (e.g. cat or dog). It is often reasonably assumed that the classification result should not be affected by the position of the object in the image, i.e., the function \(f\) must be shift-invariant. Multi-layer Perceptrons, which can approximate any smooth function, do not have this property – one of the reasons why early attempts to apply these architectures to problems of pattern recognition in the 1970s failed. The development of neural network architectures with local weight sharing, as epitomised by Convolutional Neural Networks, was, among other reasons, motivated by the need for shift-invariant object classification.

If we however take a closer look at the convolutional layers of CNNs, we will find that they are not shift-invariant but shift-equivariant: in other words, a shift of the input to a convolutional layer produces a shift in the output feature maps by the same amount.

A function \(f: \mathcal {X}(\Omega ) \rightarrow \mathcal {X}(\Omega )\) is \(\fG \)-equivariant if More generally, we might have \(f: \mathcal {X}(\Omega ) \rightarrow \mathcal {X}(\Omega ')\) with input and output spaces having different domains \(\Omega , \Omega '\) and representations \(\rho \), \(\rho '\) of the same group \(\fG \). In this case, equivariance is defined as \(f(\rho (\fg )x) = \rho '(\fg ) f(x)\). \(f(\rho (\fg )x) = \rho (\fg ) f(x)\) for all \(\fg \in \fG \), i.e., group action on the input affects the output in the same way.

Resorting again to computer vision, a prototypical application requiring shift-equivariance is image segmentation, where the output of \(f\) is a pixel-wise image mask. Obviously, the segmentation mask must follow shifts in the input image. In this example, the domains of the input and output are the same, but since the input has three color channels while the output has one channel per class, the representations \((\rho , \mathcal {X}(\Omega , \mathcal {C}))\) and \((\rho ', \mathcal {X}(\Omega , \mathcal {C}'))\) are somewhat different.

However, even the previous use case of image classification is usually implemented as a sequence of convolutional (shift-equivariant) layers, followed by global pooling (which is shift-invariant). As we will see in Section 3.5, this is a general blueprint of a majority of deep learning architectures, including CNNs and Graph Neural Networks (GNNs).

3.2 Isomorphisms and Automorphisms

Subgroups and Levels of structure

As mentioned before, a symmetryInvertible and structure-preserving maps between different objects often go under the generic name of isomorphisms (Greek for ‘equal shape’). An isomorphism from an object to itself is called an automorphism, or symmetry. is a transformation that preserves some property or structure, and the set of all such transformations for a given structure forms a symmetry group. It happens often that there is not one but multiple structures of interest, and so we can consider several levels of structure on our domain \(\Omega \). Hence, what counts as a symmetry depends on the structure under consideration, but in all cases a symmetry is an invertible map that respects this structure.

On the most basic level, the domain \(\Omega \) is a set, which has a minimal amount of structure: all we can say is that the set has some cardinalityFor a finite set, the cardinality is the number of elements (‘size’) of the set, and for infinite sets the cardinality indicates different kinds of infinities, such as the countable infinity of the natural numbers, or the uncountable infinity of the continuum \(\R \).. Self-maps that preserve this structure are bijections (invertible maps), which we may consider as set-level symmetries. One can easily verify that this is a group by checking the axioms: a compositions of two bijections is also a bijection (closure), the associativity stems from the associativity of the function composition, the map \(\tau (u)=u\) is the identity element, and for every \(\tau \) the inverse exists by definition, satisfying \((\tau \circ \tau ^{-1})(u) = (\tau ^{-1} \circ \tau )(u) =u\).

Depending on the application, there may be further levels of structure. For instance, if \(\Omega \) is a topological space, we can consider maps that preserve continuity: such maps are called homeomorphisms and in addition to simple bijections between sets, are also continuous and have continuous inverse. Intuitively, continuous functions are well-behaved and map points in a neighbourhood (open set) around a point \(u\) to a neighbourhood around \(\tau (u)\).

One can further demand that the map and its inverse are (continuously) differentiable,Every differentiable function is continuous. If the map is continuously differentiable ‘sufficiently many times’, it is said to be smooth. i.e., the map and its inverse have a derivative at every point (and the derivative is also continuous). This requires further differentiable structure that comes with differentiable manifolds, where such maps are called diffeomorphisms and denoted by \(\mathrm {Diff}(\Omega )\). Additional examples of structures we will encounter include distances or metrics (maps preserving them are called isometries) or orientation (to the best of our knowledge, orientation-preserving maps do not have a common Greek name).

A metric or distance is a function \(d:\Omega \times \Omega \rightarrow [0,\infty )\) satisfying for all \(u,v,w \in \Omega \):

Identity of indiscernibles: \(d(u,v) =0\) iff \(u=v\).

Symmetry: \(d(u,v) = d(v,u)\).

Triangle inequality: \(d(u,v) \leq d(u,w) + d(w,v)\).

A space equipped with a metric \((\Omega ,d)\) is called a metric space.

The right level of structure to consider depends on the problem. For example, when segmenting histopathology slide images, we may wish to consider flipped versions of an image as equivalent (as the sample can be flipped when put under the microscope), but if we are trying to classify road signs, we would only want to consider orientation-preserving transformations as symmetries (since reflections could change the meaning of the sign).

As we add levels of structure to be preserved, the symmetry group will get smaller. Indeed, adding structure is equivalent to selecting a subgroup, which is a subset of the larger group that satisfies the axioms of a group by itself:

Let \((\fG ,\circ )\) be a group and \(\mathfrak {H} \subseteq \fG \) a subset. \(\mathfrak {H}\) is said to be a subgroup of \(\fG \) if \((\mathfrak {H},\circ )\) constitutes a group with the same operation.

For instance, the group of Euclidean isometries \(\E {2}\) is a subgroup of the group of planar diffeomorphisms \(\diff {2}\), and in turn the group of orientation-preserving isometries \(\SE {2}\) is a subgroup of \(\E {2}\). This hierarchy of structure follows the Erlangen Programme philosophy outlined in the Preface: in Klein’s construction, the Projective, Affine, and Euclidean geometries have increasingly more invariants and correspond to progressively smaller groups.

Isomorphisms and Automorphisms

We have described symmetries as structure preserving and invertible maps from an object to itself. Such maps are also known as automorphisms, and describe a way in which an object is equivalent it itself. However, an equally important class of maps are the so-called isomorphisms, which exhibit an equivalence between two non-identical objects. These concepts are often conflated, but distinguishing them is necessary to create clarity for our following discussion.

To understand the difference, consider a set \(\Omega = \{0,1,2\}\). An automorphism of the set \(\Omega \) is a bijection \(\tau : \Omega \rightarrow \Omega \) such as a cyclic shift \(\tau (u) = u + 1 \mod 3\). Such a map preserves the cardinality property, and maps \(\Omega \) onto itself. If we have another set \(\Omega ' = \{a, b, c\}\) with the same number of elements, then a bijection \(\eta : \Omega \rightarrow \Omega '\) such as \(\eta (0) = a\), \(\eta (1) = b\), \(\eta (2) = c\) is a set isomorphism.

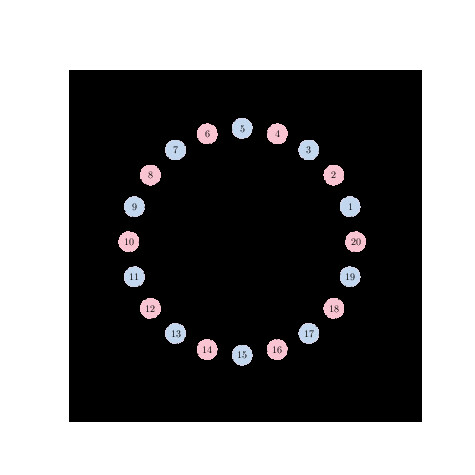

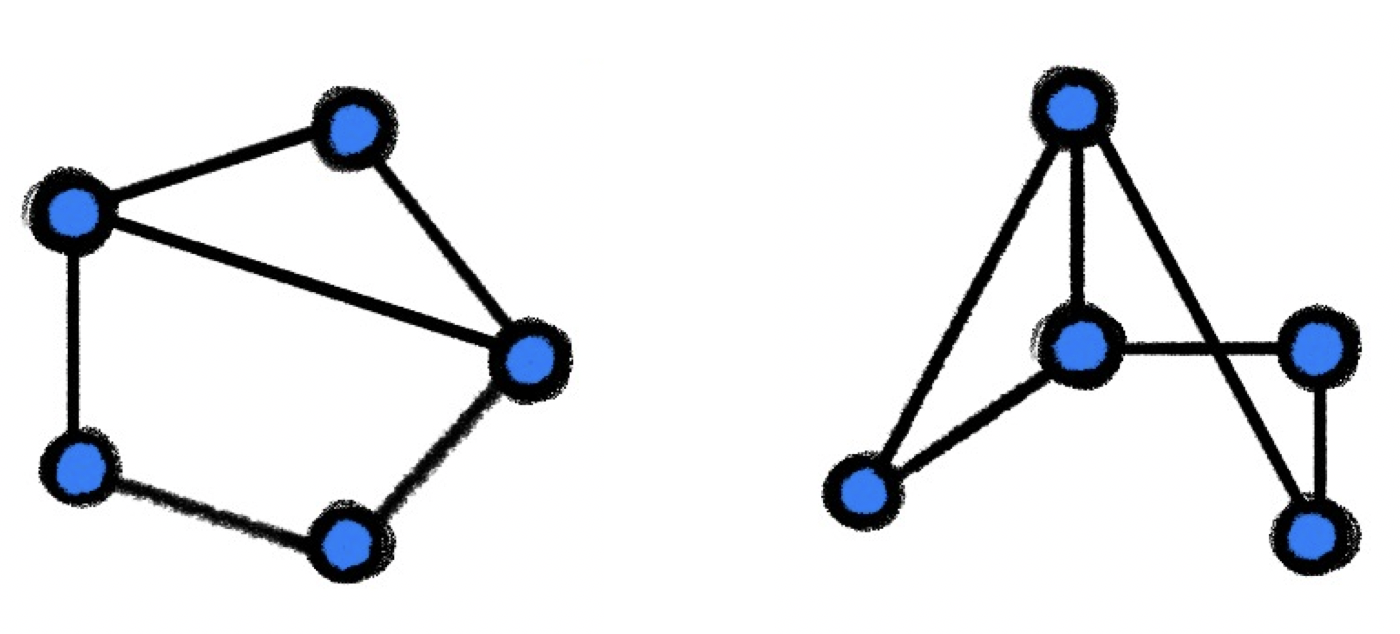

As we will see in Section 4.1 for graphs, the notion of structure includes not just the number of nodes, but also the connectivity. An isomorphism \(\eta : \mathcal {V} \rightarrow \mathcal

{V}'\) between two graphs \(\mathcal {G}=(\mathcal {V},\mathcal {E})\) and \(\mathcal {G}'=(\mathcal {V}',\mathcal {E}')\) is thus a bijection between the nodes that maps pairs of connected nodes to pairs of

connected nodes, and likewise for pairs of non-connected nodes.I.e., \((\eta (u),\eta (v)) \in \mathcal {V}'\) iff \((u,v) \in \mathcal {V}\). Two isomorphic graphs are thus structurally

identical, and differ only in the way their nodes are ordered.

The Folkman graph (?) is a beautiful example of a graph with 3840 automorphisms, exemplified by the many symmetric ways to draw it. On the other hand, a graph automorphism or symmetry is a map \(\tau : \mathcal {V}

\rightarrow \mathcal {V}\) maps the nodes of the graph back to itself, while preserving the connectivity. A graph with a non-trivial automorphism (i.e., \(\tau \neq \mathrm {id}\)) presents symmetries.

3.3 Deformation Stability

The symmetry formalism introduced in Sections 3.1–3.2 captures an idealised world where we know exactly which transformations are to be considered as symmetries, and we want to respect these symmetries exactly. For instance in computer vision, we might assume that planar translations are exact symmetries. However, the real world is noisy and this model falls short in two ways.

Two objects moving at different velocities in a video define a transformation outside the translation group. Firstly, while these simple groups provide a way to understand global symmetries of the domain \(\Omega \) (and by

extension, of signals on it, \(\gX (\Omega )\)), they do not capture local symmetries well. For instance, consider a video scene with several objects, each moving along its own different direction. At subsequent frames, the resulting scene will

contain approximately the same semantic information, yet no global translation explains the transformation from one frame to another. In other cases, such as a deformable 3D object viewed by a camera, it is simply very hard to describe the group of

transformations that preserve the object identity. These examples illustrate that in reality we are more interested in a far larger set of transformations where global, exact invariance is replaced by a local, inexact one. In our discussion, we will distinguish

between two scenarios: the setting where the domain \(\Omega \) is fixed, and signals \(x \in \gX (\Omega )\) are undergoing deformations, and the setting where the domain \(\Omega \) itself may be deformed.

Two objects moving at different velocities in a video define a transformation outside the translation group. Firstly, while these simple groups provide a way to understand global symmetries of the domain \(\Omega \) (and by

extension, of signals on it, \(\gX (\Omega )\)), they do not capture local symmetries well. For instance, consider a video scene with several objects, each moving along its own different direction. At subsequent frames, the resulting scene will

contain approximately the same semantic information, yet no global translation explains the transformation from one frame to another. In other cases, such as a deformable 3D object viewed by a camera, it is simply very hard to describe the group of

transformations that preserve the object identity. These examples illustrate that in reality we are more interested in a far larger set of transformations where global, exact invariance is replaced by a local, inexact one. In our discussion, we will distinguish

between two scenarios: the setting where the domain \(\Omega \) is fixed, and signals \(x \in \gX (\Omega )\) are undergoing deformations, and the setting where the domain \(\Omega \) itself may be deformed.

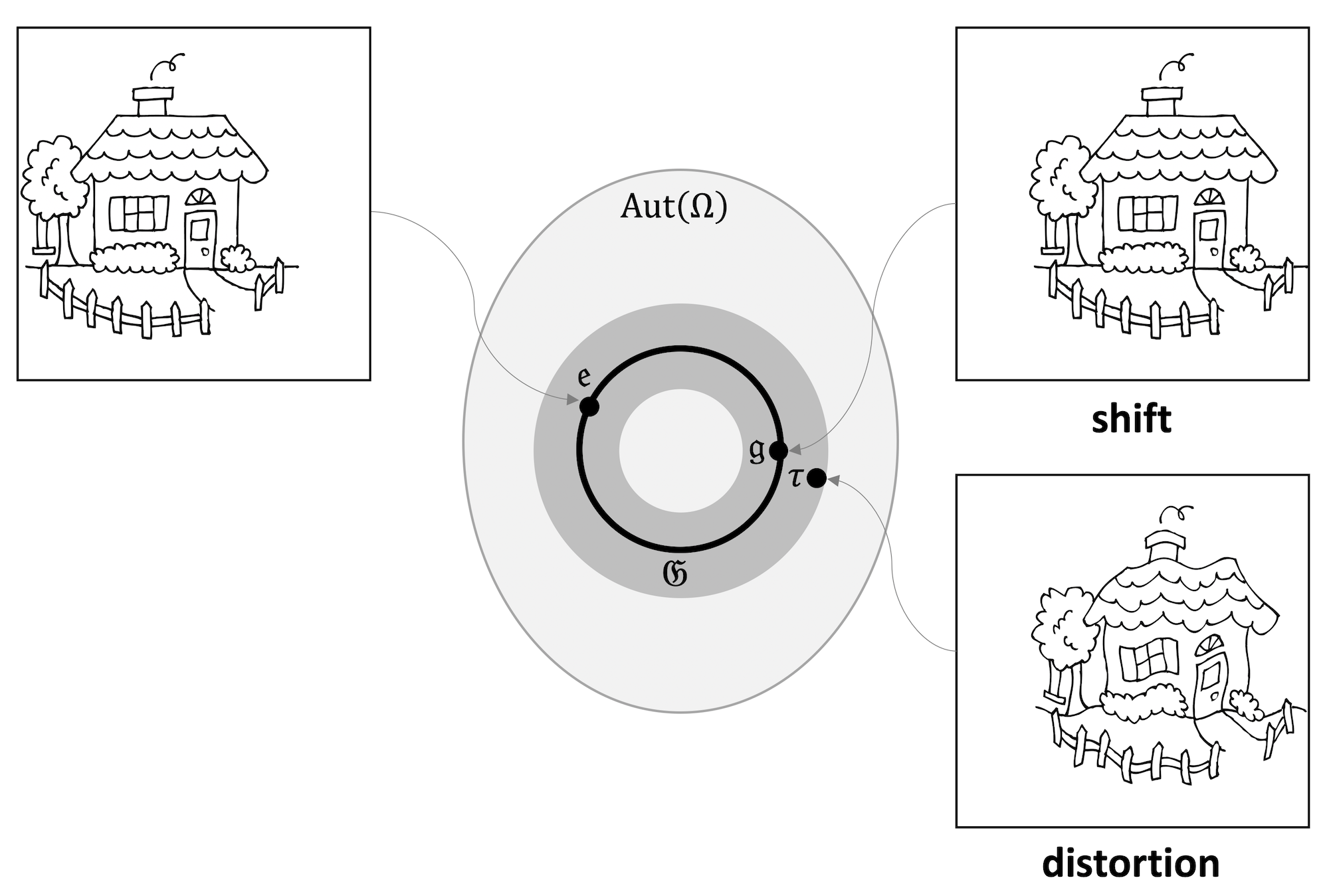

Stability to signal deformations In many applications, we know a priori that a small deformation of the signal \(x\) should not change the output of \(f(x)\), so it is tempting to consider such deformations as symmetries. For instance, we could view small diffeomorphisms \(\tau \in \diff {\Omega }\), or even small bijections, as symmetries. However, small deformations can be composed to form large deformations, so “small deformations” do not form a group,E.g., the composition of two \(\epsilon \)-isometries is a \(2\epsilon \)-isometry, violating the closure property. and we cannot ask for invariance or equivariance to small deformations only. Since large deformations can can actually materially change the semantic content of the input, it is not a good idea to use the full group \(\diff {\Omega }\) as symmetry group either.

A better approach is to quantify how “far” a given \(\tau \in \diff {\Omega }\) is from a given symmetry subgroup \(\fG \subset \diff {\Omega }\) (e.g. translations) with a complexity measure \(c(\tau )\), so that \(c(\tau ) = 0\) whenever \(\tau \in \fG \). We can now replace our previous definition of exact invariance and equivarance under group actions with a ‘softer’ notion of deformation stability (or approximate invariance):

\(\seteqnumber{0}{}{3}\)\begin{equation} \label {eq:defstability1} \| f(\rho (\tau ) x) - f(x)\| \leq C c(\tau ) \|x\|,~,~ \forall x\in \gX (\Omega ) \end{equation}

where \(\rho (\tau )x(u) = x(\tau ^{-1} u)\) as before, and where \(C\) is some constant independent of the signal \(x\). A function \(f\in \mathcal {F}(\mathcal {X}(\Omega ))\) satisfying the above equation is said to be geometrically stable. We will see examples of such functions in the next Section 3.4.

Since \(c(\tau )=0\) for \(\tau \in \fG \), this definition generalises the \(\fG \)-invariance property defined above. Its utility in applications depends on introducing an appropriate deformation cost. In the case of images defined over a continuous Euclidean plane, a popular choice is \(c^2(\tau ) := \int _\Omega \| \nabla \tau (u)\|^2 \mathrm {d}u\), which measures the ‘elasticity’ of \(\tau \), i.e., how different it is from the displacement by a constant vector field. This deformation cost is in fact a norm often called the Dirichlet energy, and can be used to quantify how far \(\tau \) is from the translation group.

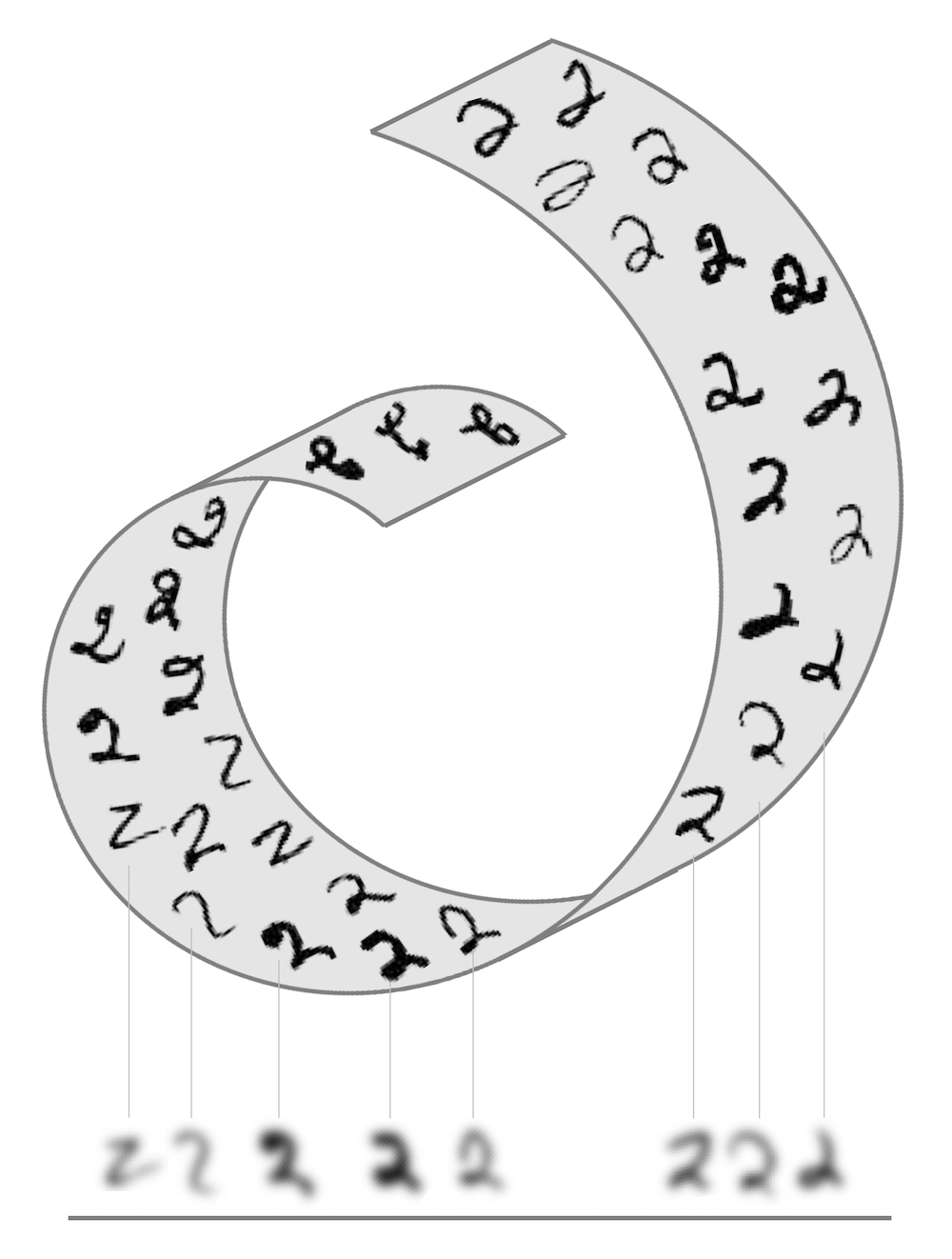

Figure 6: The set of all bijective mappings from \(\Omega \) into itself forms the set automorphism group \(\mathrm {Aut}(\Omega )\), of which a symmetry group \(\fG \) (shown as a circle) is a subgroup. Geometric Stability extends the notion of \(\fG \)-invariance and equivariance to ‘transformations around \(\fG \)’ (shown as gray ring), quantified in the sense of some metric between transformations. In this example, a smooth distortion of the image is close to a shift.

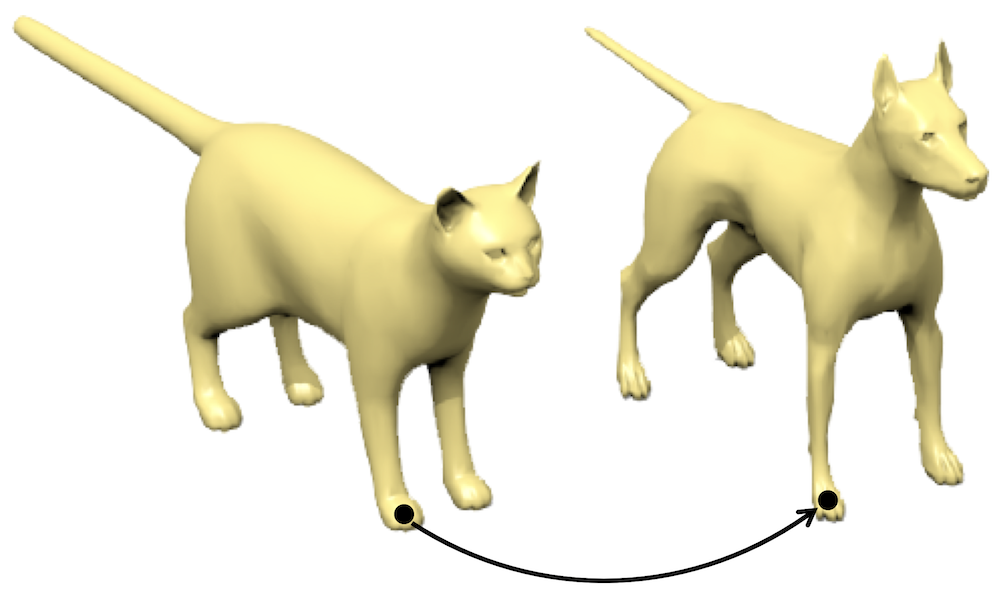

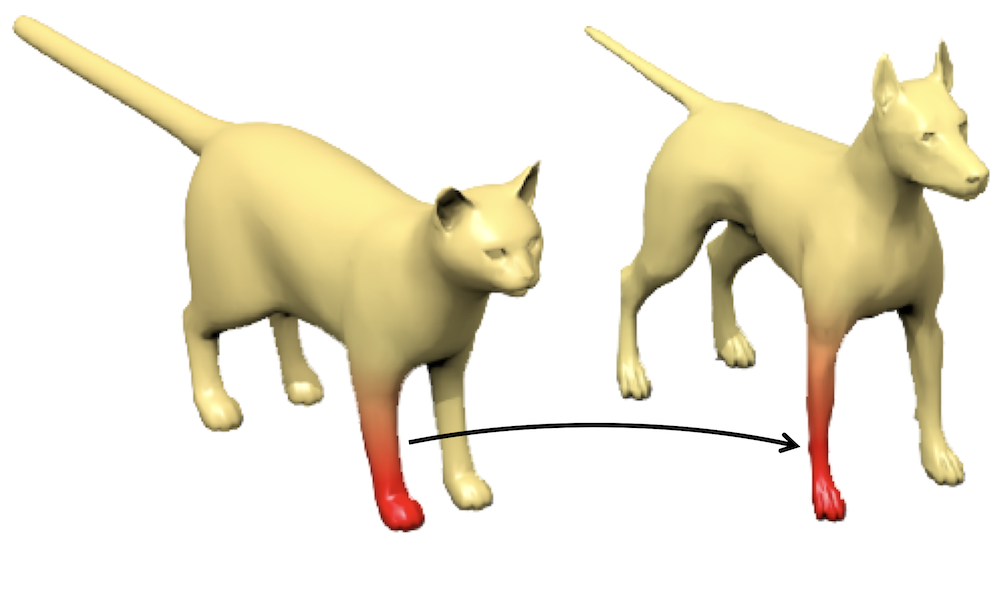

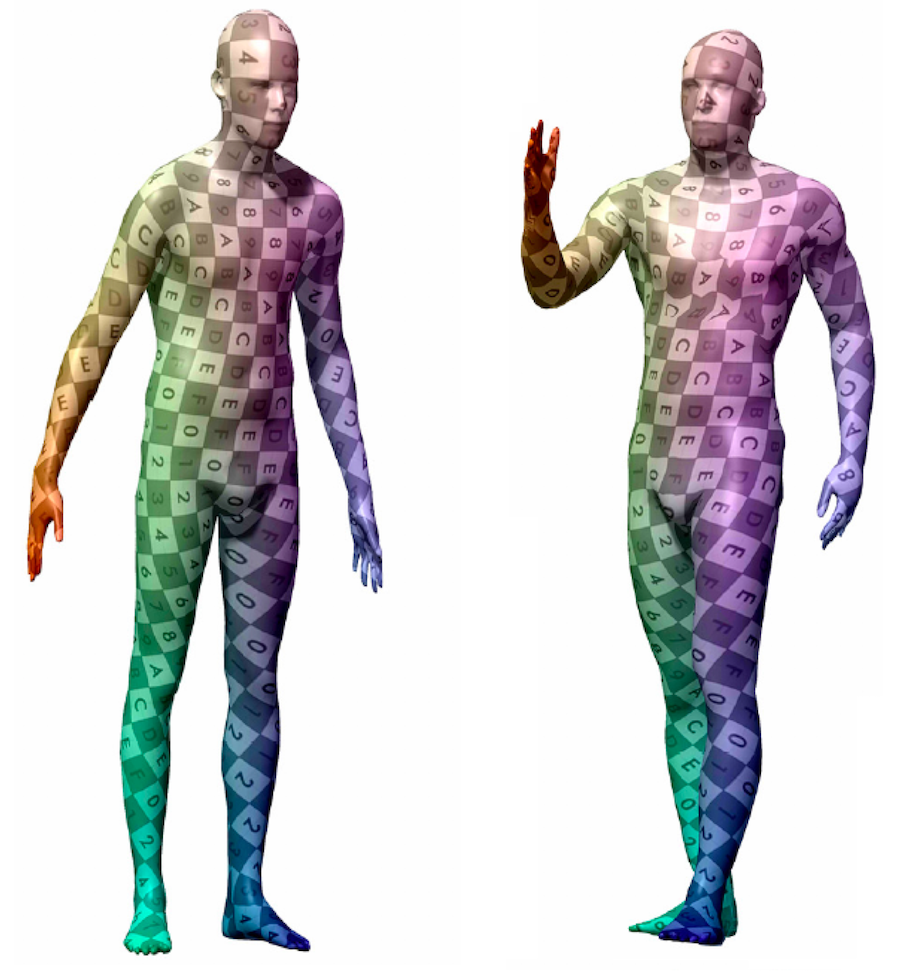

Stability to domain deformations In many applications, the object being deformed is not the signal, but the geometric domain \(\Omega \) itself. Canonical instances of this are applications dealing with graphs and manifolds: a graph can model a social network at different instance of time containing slightly different social relations (follow graph), or a manifold can model a 3D object undergoing non-rigid deformations. This deformation can be quantified as follows. If \(\mathcal {D}\) denotes the space of all possible variable domains (such as the space of all graphs, or the space of Riemannian manifolds), one can define for \(\Omega , \tilde {\Omega } \in \mathcal {D}\) an appropriate metric (‘distance’) \(d(\Omega , \tilde {\Omega })\) satisfying \(d(\Omega ,\tilde {\Omega })=0\) if \(\Omega \) and \(\tilde {\Omega }\) are equivalent in some sense: for example, the graph edit distance vanishes when the graphs are isomorphic, and the Gromov-Hausdorff distance between Riemannian manifolds equipped with geodesic distances vanishes when two manifolds are isometric.The graph edit distance measures the minimal cost of making two graphs isomorphic by a sequences of graph edit operations. The Gromov-Hausdorff distance measures the smallest possible metric distortion of a correspondence between two metric spaces, see ?.

A common construction of such distances between domains relies on some family of invertible mapping \(\eta : \Omega \to \tilde {\Omega }\) that try to ‘align’ the domains in a way that the corresponding structures are best preserved. For example, in the case of graphs or Riemannian manifolds (regarded as metric spaces with the geodesic distance), this alignment can compare pair-wise adjacency or distance structures (\(d\) and \(\tilde {d}\), respectively),

\[d_{\gD }(\Omega , \tilde {\Omega }) = \inf _{\eta \in \fG }\|d - \tilde {d}\circ (\eta \times \eta )\|\]

where \(\fG \) is the group of isomorphisms such as bijections or isometries, and the norm is defined over the product space \(\Omega \times \Omega \). In other words, a distance between elements of \(\Omega ,\tilde {\Omega }\) is ‘lifted’ to a distance between the domains themselves, by accounting for all the possible alignments that preserve the internal structure. Two graphs can be aligned by the Quadratic Assignment Problem (QAP), which considers in its simplest form two graphs \(G,\tilde {G}\) of the same size \(n\), and solves \(\min _{\mathbf {P} \in \Sigma _n} \mathrm {trace}(\mathbf {A P \tilde {A} P}^\top )\), where \(\mathbf {A}, \tilde {\mathbf {A}}\) are the respective adjacency matrices and \(\Sigma _n\) is the group of \(n \times n\) permutation matrices. The graph edit distance can be associated with such QAP (?). Given a signal \(x \in \gX (\Omega )\) and a deformed domain \(\tilde {\Omega }\), one can then consider the deformed signal \(\tilde {x} = x \circ \eta ^{-1} \in \gX (\tilde {\Omega })\).

By slightly abusing the notation, we define \(\gX (\mathcal {D}) = \{ (\gX (\Omega ), \Omega ) \, : \, \Omega \in \mathcal {D} \}\) as the ensemble of possible input signals defined over a varying domain. A function \(f : \gX (\mathcal {D}) \to \gY \) is stable to domain deformations if

\(\seteqnumber{0}{}{4}\)\begin{equation} \| f( x, \Omega ) - f(\tilde {x}, \tilde {\Omega }) \| \leq C \|x \| d_{\gD }(\Omega , \tilde {\Omega })~ \label {eqn:domain_def_stability} \end{equation}

for all \(\Omega , \tilde {\Omega } \in \mathcal {D}\), and \(x \in \mathcal {X}(\Omega )\). We will discuss this notion of stability in the context of manifolds in Sections 4.4–4.6, where isometric deformations play a crucial role. Furthermore, it can be shown that the stability to domain deformations is a natural generalisation of the stability to signal deformations, by viewing the latter in terms of deformations of the volume form ?.

3.4 Scale Separation

While deformation stability substantially strengthens the global symmetry priors, it is not sufficient in itself to overcome the curse of dimensionality, in the sense that, informally speaking, there are still “too many" functions that respect (4) as the size of the domain grows. A key insight to overcome this curse is to exploit the multiscale structure of physical tasks. Before describing multiscale representations, we need to introduce the main elements of Fourier transforms, which rely on frequency rather than scale.

Fourier Transform and Global invariants

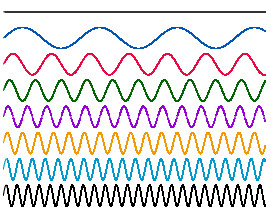

Arguably

Fourier basis functions have global support. As a result, local signals produce energy across all frequencies. the most famous signal decomposition is the Fourier transform, the cornerstone of harmonic analysis. The classical one-dimensional

Fourier transform

Fourier basis functions have global support. As a result, local signals produce energy across all frequencies. the most famous signal decomposition is the Fourier transform, the cornerstone of harmonic analysis. The classical one-dimensional

Fourier transform

\[ \hat {x}(\xi ) = \int _{-\infty }^{+\infty } x(u) e^{-\mi \xi u} \mathrm {d}u \]

expresses the function \(x(u) \in L^2(\Omega )\) on the domain \(\Omega = \mathbb {R}\) as a linear combination of orthogonal oscillating basis functions \(\varphi _\xi (u) = e^{\mi \xi u}\), indexed by their rate of oscillation (or frequency) \(\xi \). Such an organisation into frequencies reveals important information about the signal, e.g. its smoothness and localisation. The Fourier basis itself has a deep geometric foundation and can be interpreted as the natural vibrations of the domain, related to its geometric structure (see e.g. ?).

The Fourier transformIn the following, we will use convolution and (cross-)correlation

\[ (x \, \star \,\theta )(u) = \int _{-\infty }^{+\infty } \hspace {-2mm} x(v)\theta (u+v) \mathrm {d}v \]

interchangeably, as it is common in machine learning: the difference between the two is whether the filter is reflected, and since the filter is typically learnable, the distinction is purely notational. plays a crucial role in signal processing as it offers a

dual formulation of convolution,

\[ (x\star \theta )(u) = \int _{-\infty }^{+\infty } x(v)\theta (u-v) \mathrm {d}v \]

a standard model of linear signal filtering (here and in the following, \(x\) denotes the signal and \(\theta \) the filter). As we will show in the following, the convolution operator is diagonalised in the Fourier basis, making it possible to express convolution as the product of the respective Fourier transforms,

\[ \widehat {(x\star \theta )}(\xi ) = \hat {x}(\xi ) \cdot \hat {\theta }(\xi ), \]

a fact known in signal processing as the Convolution Theorem.

As it turns out, many fundamental differential operators such as the Laplacian are described as convolutions on Euclidean domains. Since such differential operators can be defined intrinsically over very general geometries, this provides a formal procedure to extend Fourier transforms beyond Euclidean domains, including graphs, groups and manifolds. We will discuss this in detail in Section 4.4.

An essential aspect of Fourier transforms is that they reveal global properties of the signal and the domain, such as smoothness or conductance. Such global behavior is convenient in presence of global symmetries of the domain such as translation, but not to study more general diffeomorphisms. This requires a representation that trades off spatial and frequential localisation, as we see next.

Multiscale representations

The notion of local invariance can be articulated by switching from a Fourier frequency-based representation to a scale-based representation, the cornerstone of multi-scale decomposition methods such as wavelets.See ? for a comperehensive introduction. The essential insight of multi-scale methods is to decompose functions defined over the domain \(\Omega \) into elementary functions that are localised both in space and

frequency.

Contrary to Fourier, wavelet atoms are localised and multi-scale, allowing to capture fine details of the signal with atoms having small spatial support and coarse details with atoms having large spatial support. The term atom here is

synonymous with ‘basis element’ in Fourier analysis, with the caveat that wavelets are redundant (over-complete). In the case of wavelets, this is achieved by correlating a translated and dilated filter (mother wavelet) \(\psi \), producing a

combined spatio-frequency representation called a continuous wavelet transform

Contrary to Fourier, wavelet atoms are localised and multi-scale, allowing to capture fine details of the signal with atoms having small spatial support and coarse details with atoms having large spatial support. The term atom here is

synonymous with ‘basis element’ in Fourier analysis, with the caveat that wavelets are redundant (over-complete). In the case of wavelets, this is achieved by correlating a translated and dilated filter (mother wavelet) \(\psi \), producing a

combined spatio-frequency representation called a continuous wavelet transform

\[ (W_\psi x)(u,\xi ) = \xi ^{-1/2} \int _{-\infty }^{+\infty } \psi \left (\frac {v-u}{\xi }\right ) x(v) \mathrm {d}v. \]

The translated and dilated filters are called wavelet atoms; their spatial position and dilation correspond to the coordinates \(u\) and \(\xi \) of the wavelet transform. These coordinates are usually sampled dyadically (\(\xi =2^{-j}\) and \(u = 2^{-j}k\)), with \(j\) referred to as scale. Multi-scale signal representations bring important benefits in terms of capturing regularity properties beyond global smoothness, such as piece-wise smoothness, which made them a popular tool in signal and image processing and numerical analysis in the 90s.

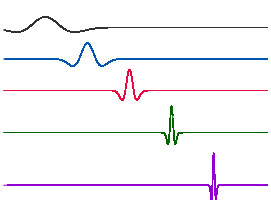

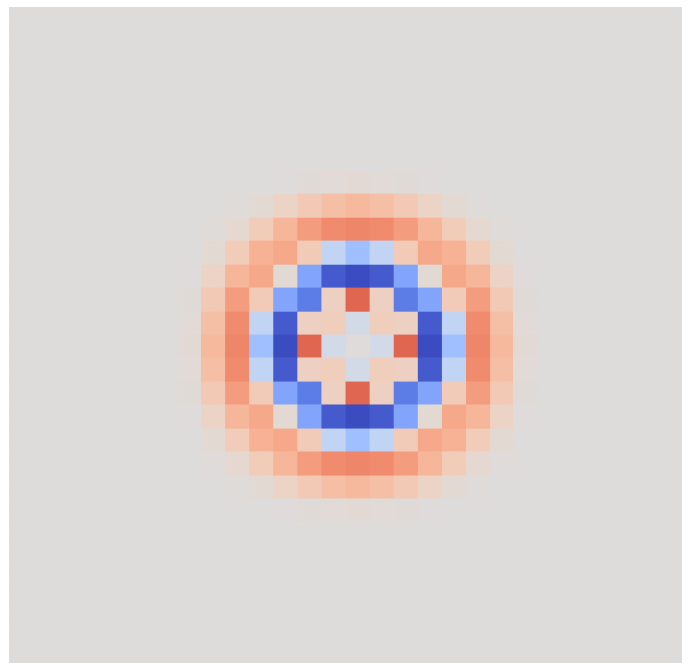

Deformation stability of Multiscale representations: The benefit of multiscale localised wavelet decompositions over Fourier decompositions is revealed when considering the effect of small deformations ‘nearby’ the underlying symmetry group. Let us illustrate this important concept in the Euclidean domain and the translation group. Since the Fourier representation diagonalises the shift operator (which can be thought of as convolution, as we will see in more detail in Section 4.2), it is an efficient representation for translation transformations. However, Fourier decompositions are unstable under high-frequency deformations. In contrast, wavelet decompositions offer a stable representation in such cases.

Indeed, let us consider \(\tau \in \mathrm {Aut}(\Omega )\) and its associated linear representation \(\rho (\tau )\). When \(\tau (u) = u - v\) is a shift, as we will verify in Section 4.2, the operator \(\rho (\tau ) = S_v\) is a shift operator that commutes with convolution. Since convolution operators are diagonalised by the Fourier transform, the action of shift in the frequency domain amounts to shifting the complex phase of the Fourier transform,

\[ (\widehat {S_v x})(\xi ) = e^{-\mi \xi v} \hat {x}(\xi ). \]

Thus, the Fourier modulus \(f(x) = |\hat {x}|\) removing the complex phase is a simple shift-invariant function, \(f(S_v x) = f(x)\). However, if we have only approximate translation, \(\tau (u) = u - \tilde {\tau }(u)\) with \(\|\nabla \tau \|_\infty = \sup _{u\in \Omega } \| \nabla \tilde {\tau }(u)\| \leq \epsilon \), the situation is entirely different: it is possible to show that

\[ \frac {\|f(\rho (\tau ) x) - f(x) \| }{ \|x\| }= \mathcal {O}(1) \]

irrespective of how small \(\epsilon \) is (i.e., how close is \(\tau \) to being a shift). Consequently, such Fourier representation is unstable under deformations, however small. This unstability is manifested in general domains and non-rigid transformations; we will see another instance of this unstability in the analysis of 3d shapes using the natural extension of Fourier transforms described in Section 4.4.

Wavelets offer a remedy to this problem that also reveals the power of multi-scale representations. In the above example, we can show (?) that the wavelet decomposition \(W_\psi x\) is approximately equivariant to deformations,

\( \def\LWRfootnote{0} \) \[ \frac {\| \rho (\tau ) (W_\psi x) - W_\psi (\rho (\tau ) x) \|}{\|x\|} = \mathcal {O}(\epsilon ). \marginnote {This notation implies that $\rho (\tau )$ acts on the spatial coordinate of $(W_\psi x)(u,\xi )$. } \] \( \def\LWRfootnotename{footnote} \)

In other words, decomposing the signal information into scales using localised filters rather than frequencies turns a global unstable representation into a family of locally stable features. Importantly, such measurements at different scales are not yet invariant, and need to be progressively processed towards the low frequencies, hinting at the deep compositional nature of modern neural networks, and captured in our Blueprint for Geometric Deep Learning, presented next.

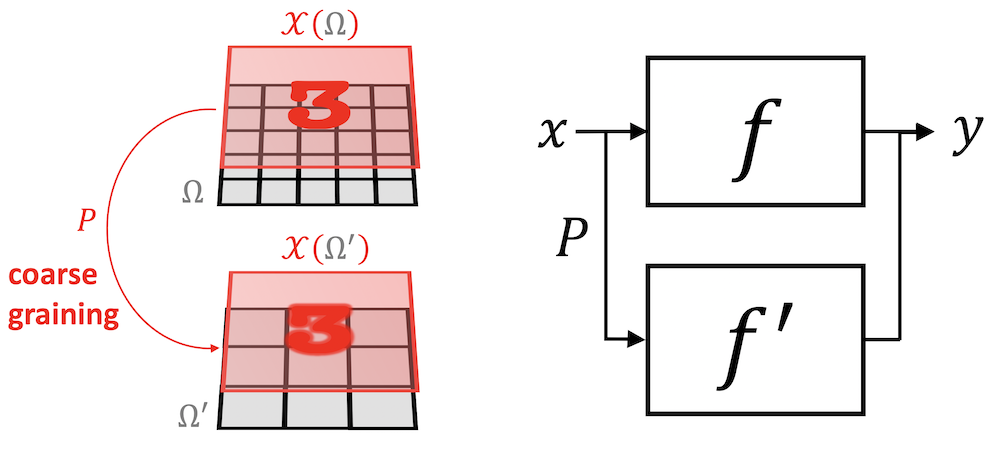

Scale Separation Prior: We can build from this insight by considering a multiscale coarsening of the data domain \(\Omega \) into a hierarchy \(\Omega _1, \hdots , \Omega _J\). As it turns out, such coarsening can be defined on very general domains, including grids, graphs, and manifolds. Informally, a coarsening assimilates nearby points \(u, u' \in \Omega \) together, and thus only requires an appropriate notion of metric in the domain. If \(\gX _{j}(\Omega _j,\mathcal {C}_j) := \{x_j: \Omega _j \to \mathcal {C}_j \}\) denotes signals defined over the coarsened domain \(\Omega _j\), we informally say that a function \(f : \gX (\Omega ) \to \gY \) is locally stable at scale \(j\) if it admits a factorisation of the form \(f \approx f_j \circ P_j \), where \(P_j : \gX (\Omega ) \to \gX _{j}(\Omega _j)\) is a non-linear coarse graining and \(f_j : \gX _{j}(\Omega _j) \to \gY \). In other words, while the target function \(f\) might depend on complex long-range interactions between features over the whole domain, in locally-stable functions it is possible to separate the interactions across scales, by first focusing on localised interactions that are then propagated towards the coarse scales.

Such principlesFast Multipole Method (FMM) is a numerical technique originally developed to speed up the calculation of long-ranged forces in \(n\)-body problems. FMM groups sources that lie close together and treats them as a single source. are of fundamental importance in many areas of physics and mathematics, as manifested for instance in statistical physics in the so-called renormalisation group, or leveraged in important numerical algorithms such as the Fast Multipole Method. In machine learning, multiscale representations and local invariance are the fundamental mathematical principles underpinning the efficiency of Convolutional Neural Networks and Graph Neural Networks and are typically implemented in the form of local pooling. In future work, we will further develop tools from computational harmonic analysis that unify these principles across our geometric domains and will shed light onto the statistical learning benefits of scale separation.

3.5 The Blueprint of Geometric Deep Learning

The geometric principles of Symmetry, Geometric Stability, and Scale Separation discussed in Sections 3.1–3.4 can be combined to provide a universal blueprint for learning stable representations of high-dimensional data. These representations will be produced by functions \(f\) operating on signals \(\mathcal {X}(\Omega ,\mathcal {C})\) defined on the domain \(\Omega \), which is endowed with a symmetry group \(\fG \).

The geometric priors we have described so far do not prescribe a specific architecture for building such representation, but rather a series of necessary conditions. However, they hint at an axiomatic construction that provably satisfies these geometric priors, while ensuring a highly expressive representation that can approximate any target function satisfying such priors.

A simple initial observation is that, in order to obtain a highly expressive representation, we are required to introduce a non-linear element, since if \(f\) is linear and \(\fG \)-invariant, then for all \(x \in \gX (\Omega )\), Here, \(\mu (\fg )\) is known as the Haar measure of the group \(\fG \), and the integral is performed over the entire group.

\[ f(x) = \frac {1}{\mu (\fG )} \int _{\fG } f( \fg . x) \mathrm {d}\mu (\fg ) = f\left (\frac {1}{\mu (\fG )} \int _{\fG } (\fg .x) \mathrm {d}\mu (\fg ) \right ), \]

which indicates that \(F\) only depends on \(x\) through the \(\fG \)-average \(A{x} = \frac {1}{\mu (\fG )} \int _{\fG } (\fg .x) \mathrm {d}\mu (\fg )\). In the case of images and translation, this would entail using only the average RGB color of the input!

While this reasoning shows that the family of linear invariants is not a very rich object, the family of linear equivariants provides a much more powerful tool, since it enables the construction of rich and stable features by composition with appropriate non-linear maps, as we will now explain. Indeed, if \(B: \gX (\Omega , \gC ) \to \gX ( \Omega , \gC ')\) is \(\fG \)-equivariant satisfying \(B(\fg .x) = \fg .B(x)\) for all \(x \in \gX \) and \(\fg \in \fG \), and \(\sigma : \gC ' \to \gC ''\) is an arbitrary (non-linear) map, then we easily verify that the composition \(U := (\bm {\sigma } \circ B): \gX (\Omega , \gC ) \to \gX ( \Omega , \gC '')\) is also \(\fG \)-equivariant, where \(\bm {\sigma }: \gX (\Omega ,\gC ') \to \gX (\Omega , \gC '')\) is the element-wise instantiation of \(\sigma \) given as \((\bm {\sigma }(x))(u) := \sigma ( x(u))\).

This simple property allows us to define a very general family of \(\fG \)-invariants, by composing \(U\) with the group averages \(A \circ U : \gX (\Omega , \gC ) \to \gC ''\). A natural question is thus whether any \(\fG \)-invariant function can be approximated at arbitrary precision by such a model, for appropriate choices of \(B\) and \(\sigma \). It is not hard to adapt the standard Universal Approximation Theorems from unstructured vector inputs to show that shallow ‘geometric’ networks are also universal approximators, by properly generalising the group average to a general non-linear invariant. Such proofs have been demonstrated, for example, for the Deep Sets model by ?. However, as already described in the case of Fourier versus Wavelet invariants, there is a fundamental tension between shallow global invariance and deformation stability. This motivates an alternative representation, which considers instead localised equivariant maps.Meaningful metrics can be defined on grids, graphs, manifolds, and groups. A notable exception are sets, where there is no predefined notion of metric. Assuming that \(\Omega \) is further equipped with a distance metric \(d\), we call an equivariant map \(U\) localised if \((Ux)(u)\) depends only on the values of \(x(v)\) for \(\mathcal {N}_u = \{v : d(u,v) \leq r\}\), for some small radius \(r\); the latter set \(\mathcal {N}_u\) is called the receptive field.

A single layer of local equivariant map \(U\) cannot approximate functions with long-range interactions, but a composition of several local equivariant maps \(U_J \circ U_{J-1} \dots \circ U_1\) increases the receptive fieldThe term ‘receptive field’ originated in the neuroscience literature, referring to the spatial domain that affects the output of a given neuron. while preserving the stability properties of local equivariants. The receptive field is further increased by interleaving downsampling operators that coarsen the domain (again assuming a metric structure), completing the parallel with Multiresolution Analysis (MRA, see e.g. ?).

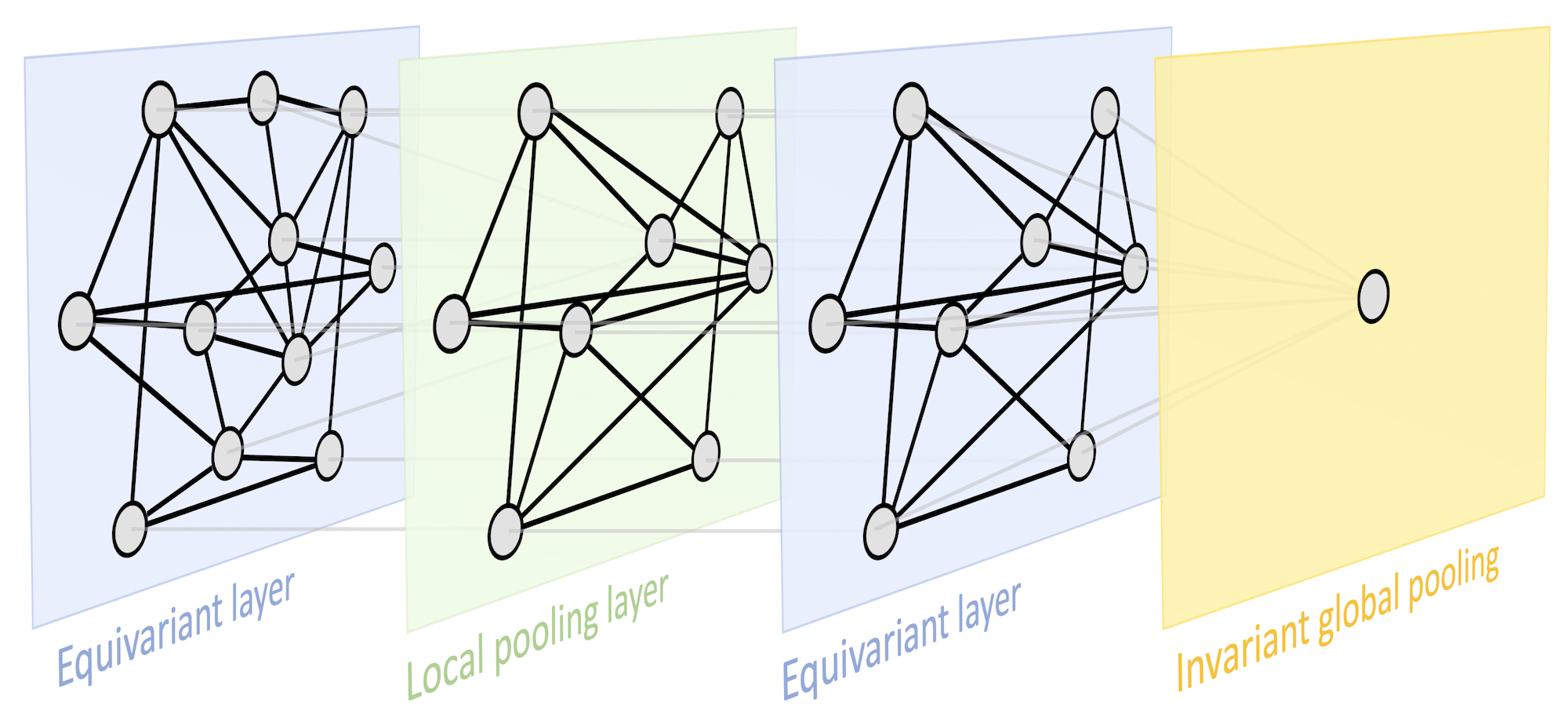

In summary, the geometry of the input domain, with knowledge of an underyling symmetry group, provides three key building blocks: (i) a local equivariant map, (ii) a global invariant map, and (iii) a coarsening operator. These building blocks provide a rich function approximation space with prescribed invariance and stability properties by combining them together in a scheme we refer to as the Geometric Deep Learning Blueprint (Figure 8).

Geometric Deep Learning Blueprint

Let \(\Omega \) and \(\Omega '\) be domains, \(\fG \) a symmetry group over \(\Omega \), and write \(\Omega ' \subseteq \Omega \) if \(\Omega '\) can be considered a compact version of \(\Omega \).

We define the following building blocks:

Linear \(\fG \)-equivariant layer \(B: \gX (\Omega , \gC ) \to \gX ( \Omega ', \gC ')\) satisfying \(B(\fg .x) = \fg .B(x)\) for all \(\fg \in \fG \) and \(x\in \gX (\Omega ,\mathcal {C})\).

Nonlinearity \(\sigma : \gC \to \gC '\) applied element-wise as \((\bm {\sigma }(x))(u) = \sigma ( x(u))\).

Local pooling (coarsening) \(P : \gX (\Omega , \gC ) \rightarrow \gX (\Omega ', \gC ) \), such that \(\Omega '\subseteq \Omega \).

\(\fG \)-invariant layer (global pooling) \(A: \gX (\Omega , \gC ) \rightarrow \mathcal {Y}\) satisfying \(A(\fg .x) = A(x)\) for all \(\fg \in \fG \) and \(x\in \gX (\Omega ,\mathcal {C})\).

Using these blocks allows constructing \(\fG \)-invariant functions \(f:\mathcal {X}(\Omega ,\mathcal {C}) \rightarrow \mathcal {Y}\) of the form

\[ f = A \circ \boldsymbol {\sigma }_J \circ B_J \circ P_{J-1} \circ \hdots \circ P_1 \circ \boldsymbol {\sigma }_1 \circ B_1 \]

where the blocks are selected such that the output space of each block matches the input space of the next one. Different blocks may exploit different choices of symmetry groups \(\fG \).

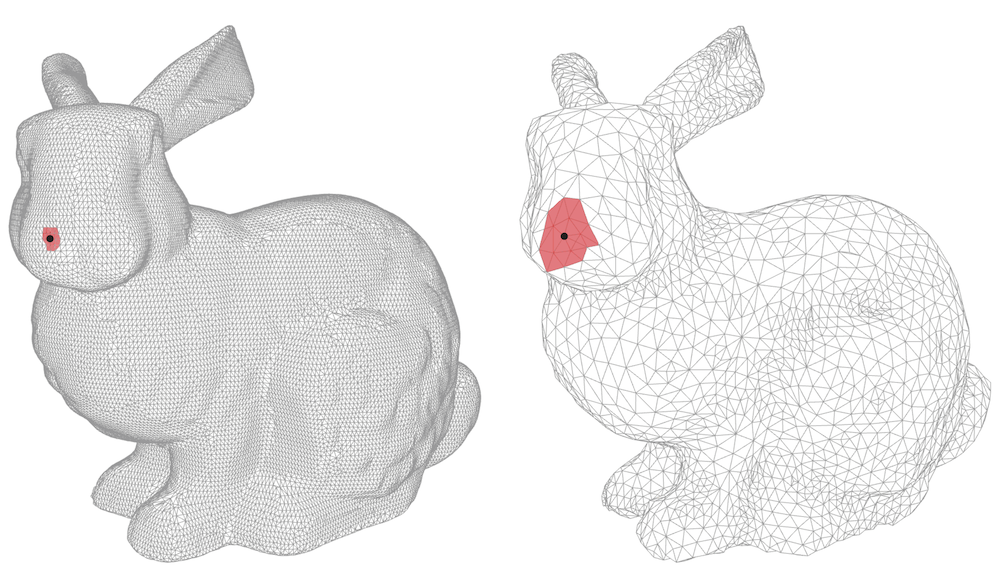

Different settings of Geometric Deep Learning One can make an important distinction between the setting when the domain \(\Omega \) is assumed to be fixed and one is only interested in varying input signals defined on that domain, or the domain is part of the input as varies together with signals defined on it. A classical instance of the former case is encountered in computer vision applications, where images are assumed to be defined on a fixed domain (grid). Graph classification is an example of the latter setting, where both the structure of the graph as well as the signal defined on it (e.g. node features) are important. In the case of varying domain, geometric stability (in the sense of insensitivity to the deformation of \(\Omega \)) plays a crucial role in Geometric Deep Learning architectures.

This blueprint has the right level of generality to be used across a wide range of geometric domains. Different Geometric Deep Learning methods thus differ in their choice of the domain, symmetry group, and the specific implementation details of the aforementioned building blocks. As we will see in the following, a large class of deep learning architectures currently in use fall into this scheme and can thus be derived from common geometric principles.

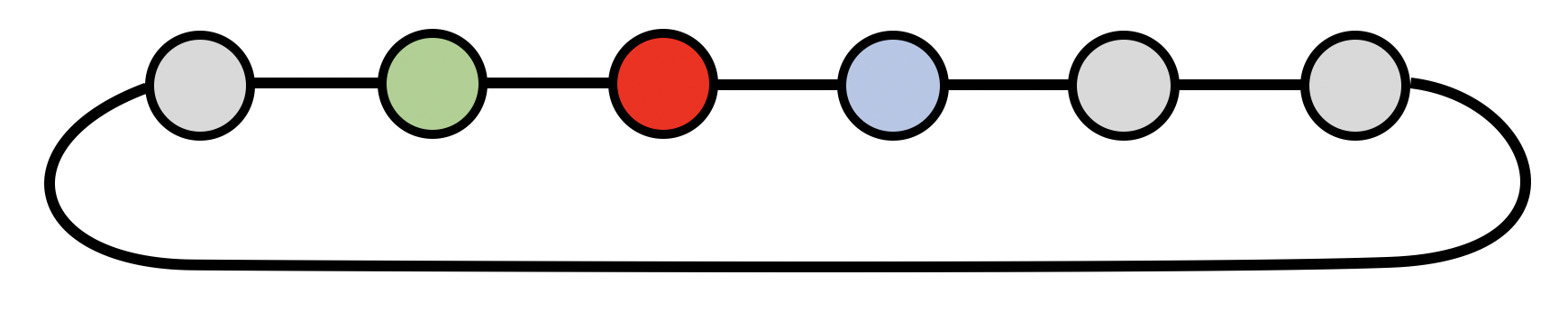

In the following sections (4.1–4.6) we will describe the various geometric domains focusing on the ‘5G’, and in Sections ??–?? the specific implementations of Geometric Deep Learning on these domains.

| Architecture | Domain \(\Omega \) | Symmetry group \(\mathfrak {G}\) |

| CNN | Grid | Translation |

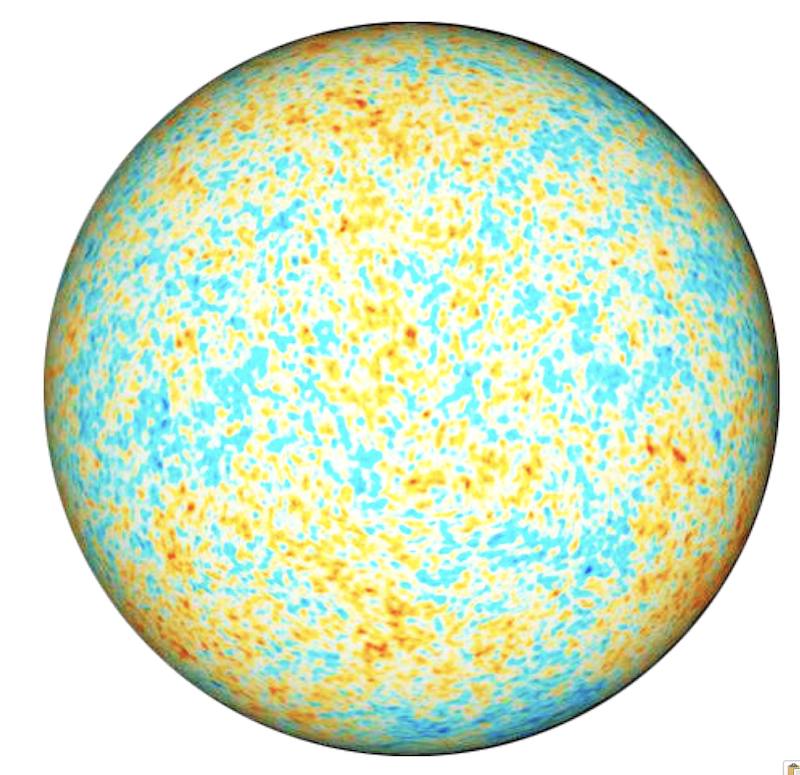

| Spherical CNN | Sphere / \(\mathrm {SO}({3})\) | Rotation \(\mathrm {SO}({3})\) |

| Intrinsic / Mesh CNN | Manifold | Isometry \(\mathrm {Iso}(\Omega )\) / |

| Gauge symmetry \(\mathrm {SO}(2)\) | ||

| GNN | Graph | Permutation \(\Sigma _n\) |

| Deep Sets | Set | Permutation \(\Sigma _n\) |

| Transformer | Complete Graph | Permutation \(\Sigma _n\) |

| LSTM | 1D Grid | Time warping |

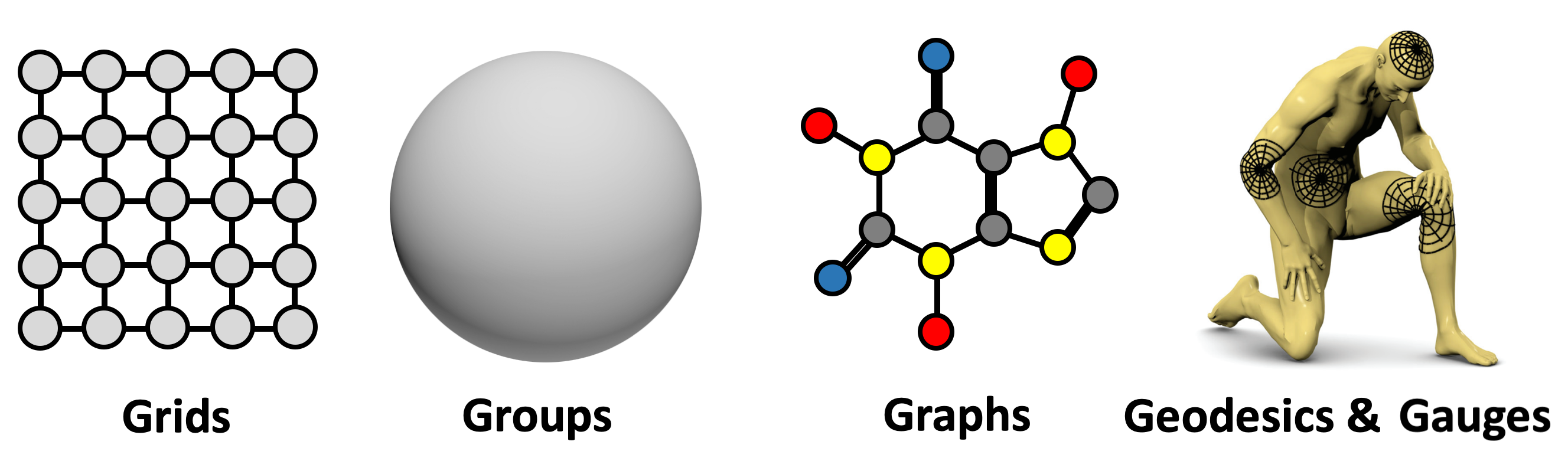

4 Geometric Domains: the 5 Gs

The main focus of our text will be on graphs, grids, groups, geodesics, and gauges. In this context, by ‘groups’ we mean global symmetry transformations in homogeneous space, by ‘geodesics’ metric structures on manifolds, and by ‘gauges’ local reference frames defined on tangent bundles (and vector bundles in general). These notions will be explained in more detail later. In the next sections, we will discuss in detail the main elements in common and the key distinguishing features between these structures and describe the symmetry groups associated with them. Our exposition is not in the order of generality – in fact, grids are particular cases of graphs – but a way to highlight important concepts underlying our Geometric Deep Learning blueprint.

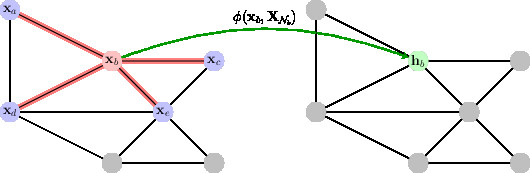

4.1 Graphs and Sets

In multiple branches of science, from sociology to particle physics, graphs are used as models of systems of relations and interactions. From our perspective, graphs give rise to a very basic type of invariance modelled by the group of permutations. Furthermore, other objects of interest to us, such as grids and sets, can be obtained as a particular case of graphs.

A graph \(\gG = (\gV , \gE )\) is a collection of nodesDepending on the application field, nodes may also be called vertices, and edges are often referred to as links or relations. We

will use these terms interchangeably. \(\gV \) and edges \(\gE \subseteq \gV \times \gV \) between pairs of nodes. For the purpose of the following discussion, we will further assume the nodes to be endowed with \(s\)-dimensional node

features, denoted by \(\mathbf {x}_u\) for all \(u \in \gV \). Social networks are perhaps among the most commonly studied examples of graphs, where nodes represent users, edges correspond to friendship relations between them, and node features

model user properties such as age, profile picture, etc. It is also often possible to endow the edges, or entire graphs, with features;

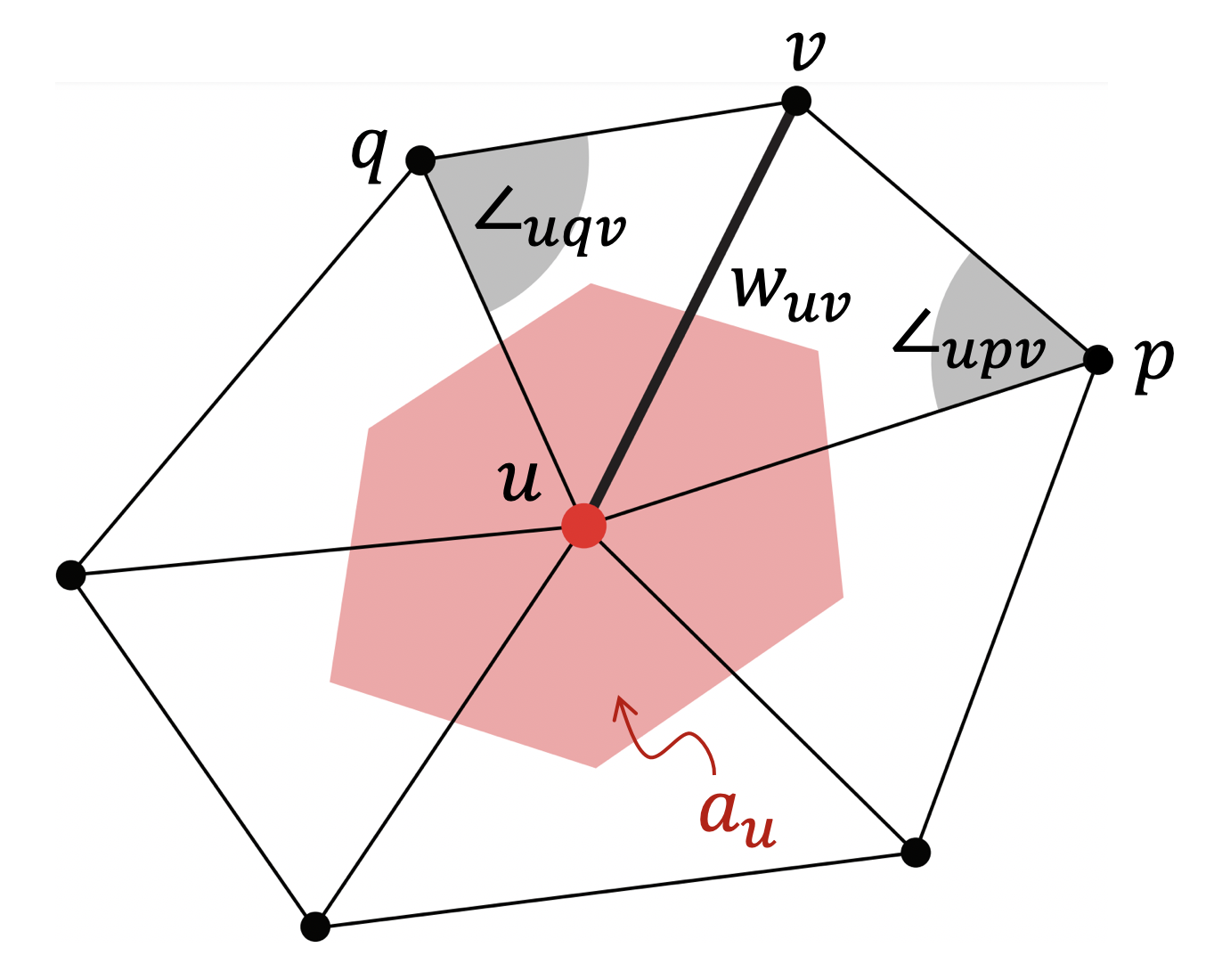

Isomorphism is an edge-preserving bijection between two graphs. Two isomorphic graphs shown here are identical up to reordering of their nodes. but as this does not alter the main findings of this section, we will defer discussing it to future

work.

Isomorphism is an edge-preserving bijection between two graphs. Two isomorphic graphs shown here are identical up to reordering of their nodes. but as this does not alter the main findings of this section, we will defer discussing it to future

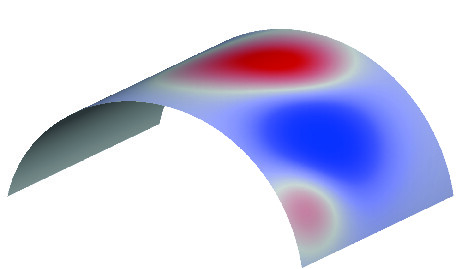

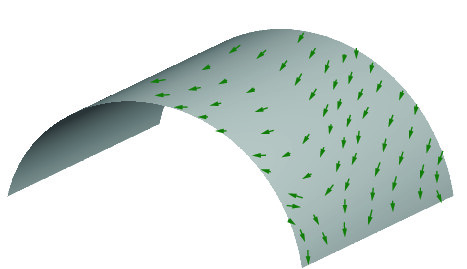

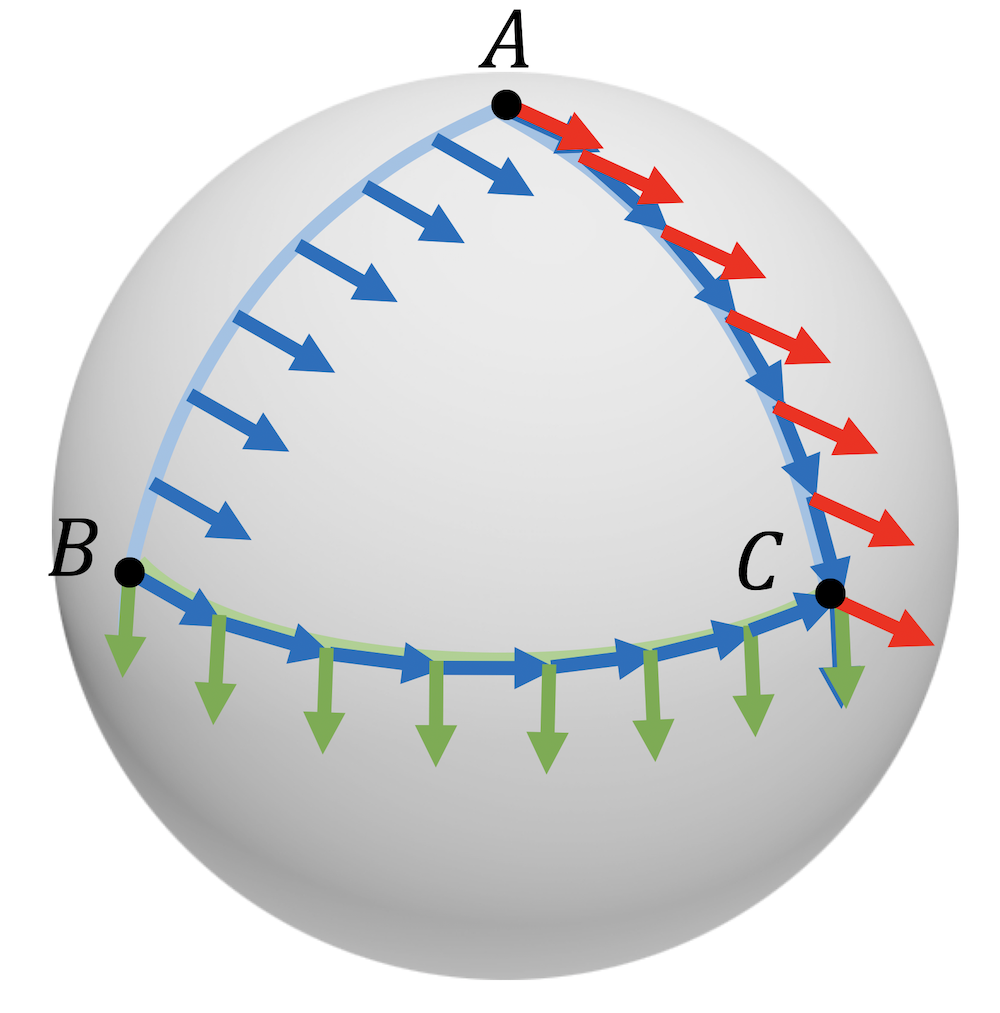

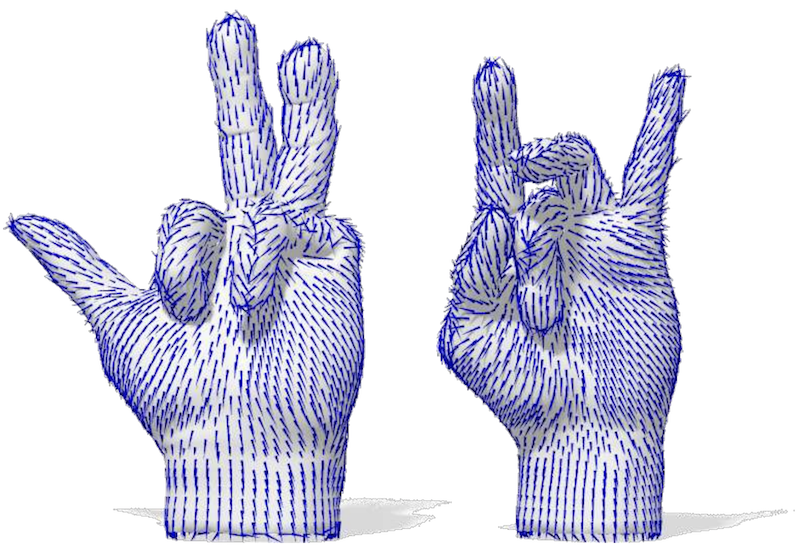

work.